How AI names encode design philosophy: a timeline

“Pointing it out and naming it has often triggered astonishing change in various groups of people and organisations. It is a paradigmatic archetype of the kind of a system a Systems Intelligent person tries to challenge and change.”

In my last post, I explored why poetic naming matters. Now, let's apply that idea.

Here is a roughly chronological look at how that naming philosophy evolved from 2017 to 2025, using 20 key examples.

1. AlphaZero

Announced by DeepMind in December 2017

Name analogy: "Alpha" (first, dominant) + "Zero" (from nothing)

Design philosophy: A reinforcement learning system that masters complex games (chess, Go, shogi) through pure self-play, with zero human knowledge, zero historical games, and zero strategic guidance: just the rules and the ability to play against itself millions of times. The paper describes an algorithm that “can achieve, tabula rasa, superhuman performance in many challenging domains.” “Tabula rasa” is an interesting choice of words: it means “blank slate.”

For centuries, chess mastery meant studying opening theory, memorizing historical games, learning from human grandmasters. AlphaZero said: none of that matters. In four hours of self-play, it discovered strategies that took humans years to develop and then surpassed them.

AlphaZero's philosophy, that intelligence can emerge from self-play rather than human-encoded knowledge, became foundational to modern AI. It showed that given the right structure (the rules of the game) and the right learning mechanism (reinforcement learning through self-play), systems can discover solutions humans never conceived. The same spirit shows up in how we train large language models today: pre-train on massive data with minimal human supervision, let the system discover patterns, then fine-tune for specific tasks. Learning from data and experience instead of hand-coded expertise is now standard across robotics, game AI, and even protein structure prediction (AlphaFold, another DeepMind “Alpha” system). The name encoded a hypothesis that became an industry paradigm.
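To make the “just the rules” idea concrete, here is a minimal sketch. It is not AlphaZero's method: AlphaZero pairs a deep neural network with Monte Carlo tree search, while this toy uses a lookup table and simple Monte Carlo value updates. The game is a hypothetical stand-in, a small version of Nim (take 1 to 3 stones; whoever takes the last stone wins). The function and parameter names are illustrative. The only built-in knowledge is which moves are legal and who won.

```python
import random

ACTIONS = (1, 2, 3)  # the rules: a player removes 1, 2, or 3 stones per turn


def legal(stones):
    """Moves allowed by the rules from a pile of `stones`."""
    return [a for a in ACTIONS if a <= stones]


def greedy(q, stones):
    """Best-known move for whoever is about to play."""
    return max(legal(stones), key=lambda a: q[(stones, a)])


def self_play_train(max_stones=10, episodes=30_000, epsilon=0.1, alpha=0.1, seed=0):
    """Learn Nim purely from self-play; no strategy is built in."""
    rng = random.Random(seed)
    # q[(stones, action)] estimates the outcome (+1 win, -1 loss) for the player making that move.
    q = {(s, a): 0.0 for s in range(1, max_stones + 1) for a in legal(s)}
    for _ in range(episodes):
        stones = rng.randint(1, max_stones)  # random starting position
        history = []
        while stones > 0:
            # Mostly play the best-known move, sometimes explore a random one.
            if rng.random() < epsilon:
                action = rng.choice(legal(stones))
            else:
                action = greedy(q, stones)
            history.append((stones, action))
            stones -= action
        # Whoever took the last stone won; walking backward, outcomes alternate between players.
        outcome = 1.0
        for move in reversed(history):
            q[move] += alpha * (outcome - q[move])
            outcome = -outcome
    return q


q = self_play_train()
for stones in range(1, 11):
    print(stones, "stones -> take", greedy(q, stones))
```

In this version of Nim, the winning move is to leave your opponent a multiple of four stones. Nothing in the code says so. A successful run should rediscover the rule anyway, so the greedy move from 5, 6, and 7 stones comes out as 1, 2, and 3.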

2. Transformer

Released by Google in June 2017

Name analogy: Electrical transformers that convert voltage

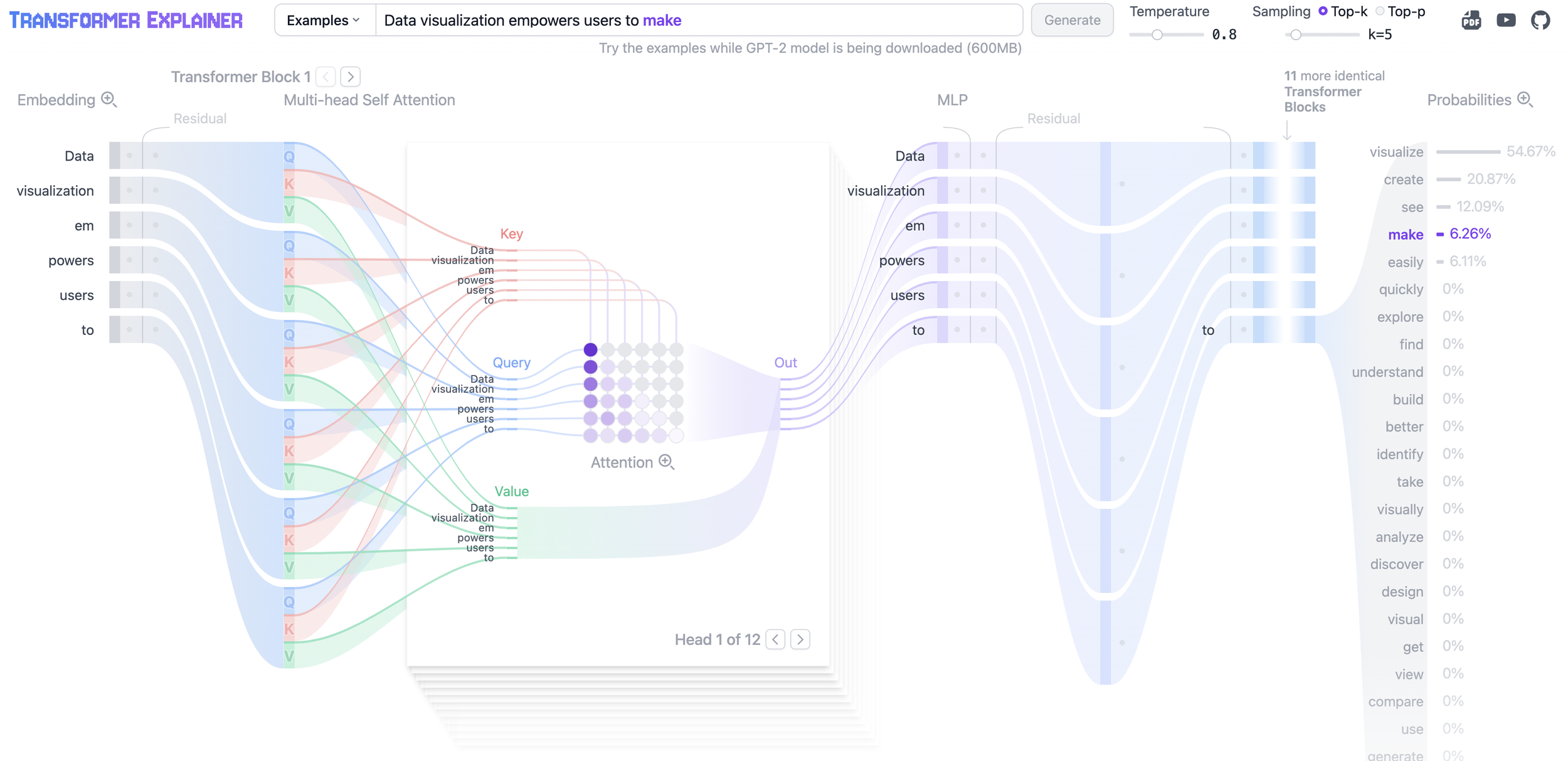

Design philosophy: An architecture that processes entire sequences simultaneously through parallel attention mechanisms, rather than reading word by word like previous models (RNNs, LSTMs). Just as an electrical transformer converts voltage from one level to another, the Transformer architecture converts raw text into contextualized meaning, transforming input holistically rather than sequentially.

We don't read sentences one word at a time in isolation. We grasp the whole sentence, weighing each word against all the others simultaneously. That's what attention does; it lets every word see every other word at once. The Transformer made machines read more like humans actually think.

The “Attention Is All You Need” paper has become one of the most cited in AI history, creating the foundation for everything that followed. Every major language model (GPT, BERT, Claude, Gemini) is built on the Transformer architecture. The name announced a paradigm shift.
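The core idea, every word weighing every other word at once, fits in a few lines. This is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It assumes identity projections, so the queries, keys, and values are the input vectors themselves. Real Transformers learn separate query, key, and value projections and run many heads in parallel. The toy 2-D word vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Single-head scaled dot-product self-attention with Q = K = V = vectors."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position, including itself.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted blend of all value vectors.
        blended = [sum(w * value[j] for w, value in zip(weights, vectors)) for j in range(d)]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-D vectors for "the", "river", "bank" (illustrative values, not learned).
sentence = [[0.1, 0.0], [0.0, 1.0], [0.5, 0.5]]
outputs, weights = self_attention(sentence)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of weights is computed independently of the others. That independence is why real implementations can batch the whole sentence into a single matrix multiplication instead of stepping through it word by word.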

3. ELMo

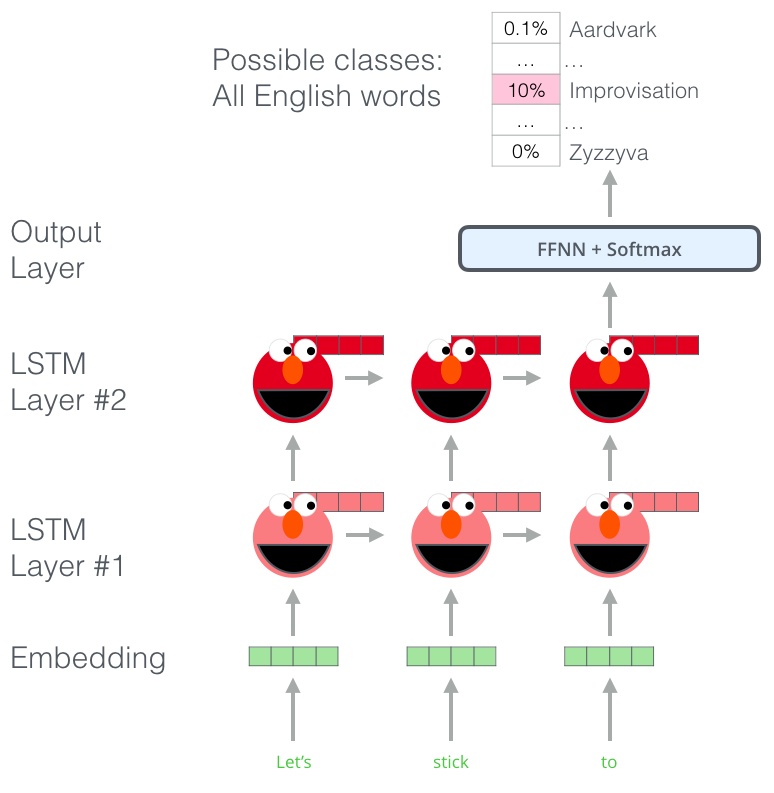

Released by the Allen Institute for Artificial Intelligence / University of Washington in February 2018

Name analogy: The Sesame Street character Elmo, a red, furry monster known for being friendly, curious, and educational (the model's name stands for Embeddings from Language Models)

Design philosophy: One of the first widely adopted models to generate contextualized word embeddings, where the same word gets different representations depending on surrounding context. We don't understand words in isolation. “Bank” in “river bank” has a different embedding than “bank” in “bank account.” “Light” means something different in “light a candle” versus “light as a feather” versus “traffic light.” Each gets its own embedding.

Our brains automatically adjust meaning based on context. ELMo brought that ability to machines at scale, treating language as inherently contextual, just like human comprehension. If Sesame Street can teach children to read, why can't ELMo teach machines to understand context?

ELMo launched a naming tradition that transformed NLP culture. BERT (another Sesame Street character) followed in 2018 and became the foundation for modern language understanding. Then came ERNIE and Big Bird, both continuing the playful lineage. The technical breakthrough was contextualization of words. The cultural breakthrough was making AI research feel warm, accessible, and human. The name encoded both.
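ELMo itself runs a deep bidirectional LSTM language model and combines its layers into each word's representation. The toy below only illustrates the consequence described above: a word's vector depends on its neighbors. It is a hypothetical sketch that blends a fixed (“static”) vector with the average of the surrounding words' vectors. The 2-D vectors and the `window` and `mix` parameters are made up for illustration.

```python
# Hypothetical 2-D "static" vectors: dimension 0 ~ finance, dimension 1 ~ nature.
STATIC = {
    "the": [0.0, 0.0],
    "river": [0.0, 1.0],
    "account": [1.0, 0.0],
    "bank": [0.5, 0.5],  # ambiguous on its own
}


def contextual(words, index, window=2, mix=0.5):
    """Blend a word's static vector with the average of its neighbors on both sides."""
    own = STATIC[words[index]]
    lo, hi = max(0, index - window), min(len(words), index + window + 1)
    neighbors = [STATIC[words[i]] for i in range(lo, hi) if i != index]
    if not neighbors:
        return list(own)
    avg = [sum(v[d] for v in neighbors) / len(neighbors) for d in range(len(own))]
    return [(1 - mix) * o + mix * a for o, a in zip(own, avg)]


print(contextual(["the", "river", "bank"], 2))    # → [0.25, 0.5], leans toward "nature"
print(contextual(["the", "bank", "account"], 1))  # → [0.5, 0.25], leans toward "finance"
```

The static entry for “bank” never changes. Only the surrounding words pull its final vector toward one sense or the other.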

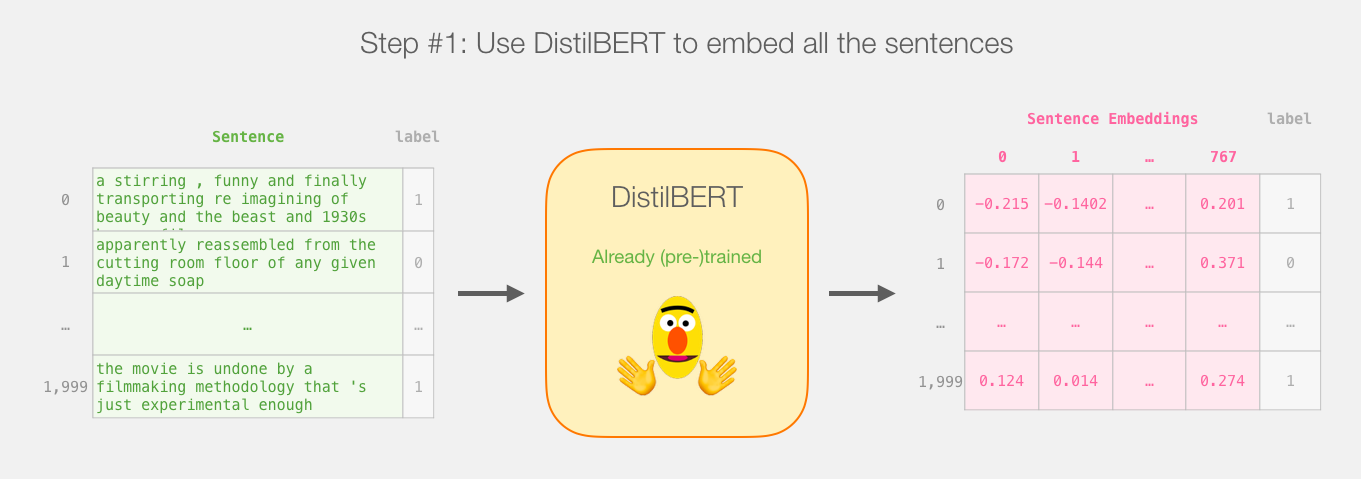

4. BERT

Released by Google in October 2018

Name analogy: An acronym (Bidirectional Encoder Representations from Transformers) that spells a human name, after the Sesame Street character

Design philosophy: A bidirectional language model that reads text in both directions at once, looking at what comes before and after a word to understand its meaning. Think of it like this: “the model performed well” could describe a neural network or a person on a runway. Similarly, “she bought an Apple at the store” only makes sense once you know whether it's fruit or a phone. BERT mirrors human comprehension: we don't understand words in isolation or in one direction only. We grasp meaning through full context.

It revolutionized NLP. Within a year of launch, it became the foundation for search engines (Google integrated it into Search in 2019), question-answering systems, and text classification. The Sesame Street naming tradition it continued also changed AI culture, making cutting-edge research feel approachable rather than intimidating. Today, BERT and its variants (RoBERTa, ALBERT, DistilBERT) power a wide range of applications, from search ranking to customer service chatbots.
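BERT learns this through masked language modeling: it hides a word and trains a deep Transformer encoder to predict the word from both sides. The sketch below is a hypothetical and much smaller stand-in. It fills in a blank using bigram counts from a four-sentence toy corpus, first with left context only and then with both sides.

```python
from collections import Counter

CORPUS = [
    "we sat by the river",
    "the river was calm",
    "she opened the bank account",
    "the river bank was muddy",
]

# Count adjacent word pairs (bigrams) across the toy corpus.
BIGRAMS = Counter()
for sentence in CORPUS:
    words = sentence.split()
    BIGRAMS.update(zip(words, words[1:]))
VOCAB = sorted({w for s in CORPUS for w in s.split()})


def fill_left_only(left):
    """Pick the word most often seen right after `left` (one direction only)."""
    return max(VOCAB, key=lambda w: BIGRAMS[(left, w)])


def fill_bidirectional(left, right):
    """Pick the word that fits both neighbors: count(left, w) * count(w, right)."""
    return max(VOCAB, key=lambda w: BIGRAMS[(left, w)] * BIGRAMS[(w, right)])


# Masked sentence: "the [MASK] account"
print(fill_left_only("the"))                 # → river
print(fill_bidirectional("the", "account"))  # → bank
```

With only the left word available, “river” wins because it follows “the” most often. The right-hand word “account” is what settles the blank.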

5. Hugging Face

Released the Transformers library in 2018

Name analogy: The 🤗 emoji. Very cute!

Design philosophy: An open-source platform for sharing AI models, datasets, and tools. Started as a chatbot company in 2016, evolved into the “GitHub of machine learning” where anyone can upload, download, and collaborate on AI models freely. A hug is fundamentally inclusive. You don't need credentials to receive a hug, you just need to show up. That's exactly how Hugging Face approached AI: no paywalls, no PhD requirements, no corporate gatekeeping. The name transformed machine learning from something cold and technical into something community-driven and accessible. It said: you belong here, regardless of your background. Come learn and contribute.

Hugging Face has become the default platform for open-source AI. Over 1 million models hosted. Millions of developers use it monthly. When Meta releases LLaMA, when Stability AI releases Stable Diffusion, when researchers release new architectures, they release on Hugging Face.

6. DALL-E

Announced by OpenAI in January 2021

Name analogy: Salvador Dalí (surrealist artist) + WALL-E (Pixar's lovable robot)

Design philosophy: OpenAI's text-to-image model that generates images from imaginative natural language descriptions. Salvador Dalí painted melting clocks and elephants on spider legs: impossible combinations made visible. WALL-E was a lonely robot who collected treasures and dreamed of connection. Together, they capture what the model invites you to do: bring your surreal ideas, your artistic experiments, your ridiculous prompts, and it will create.

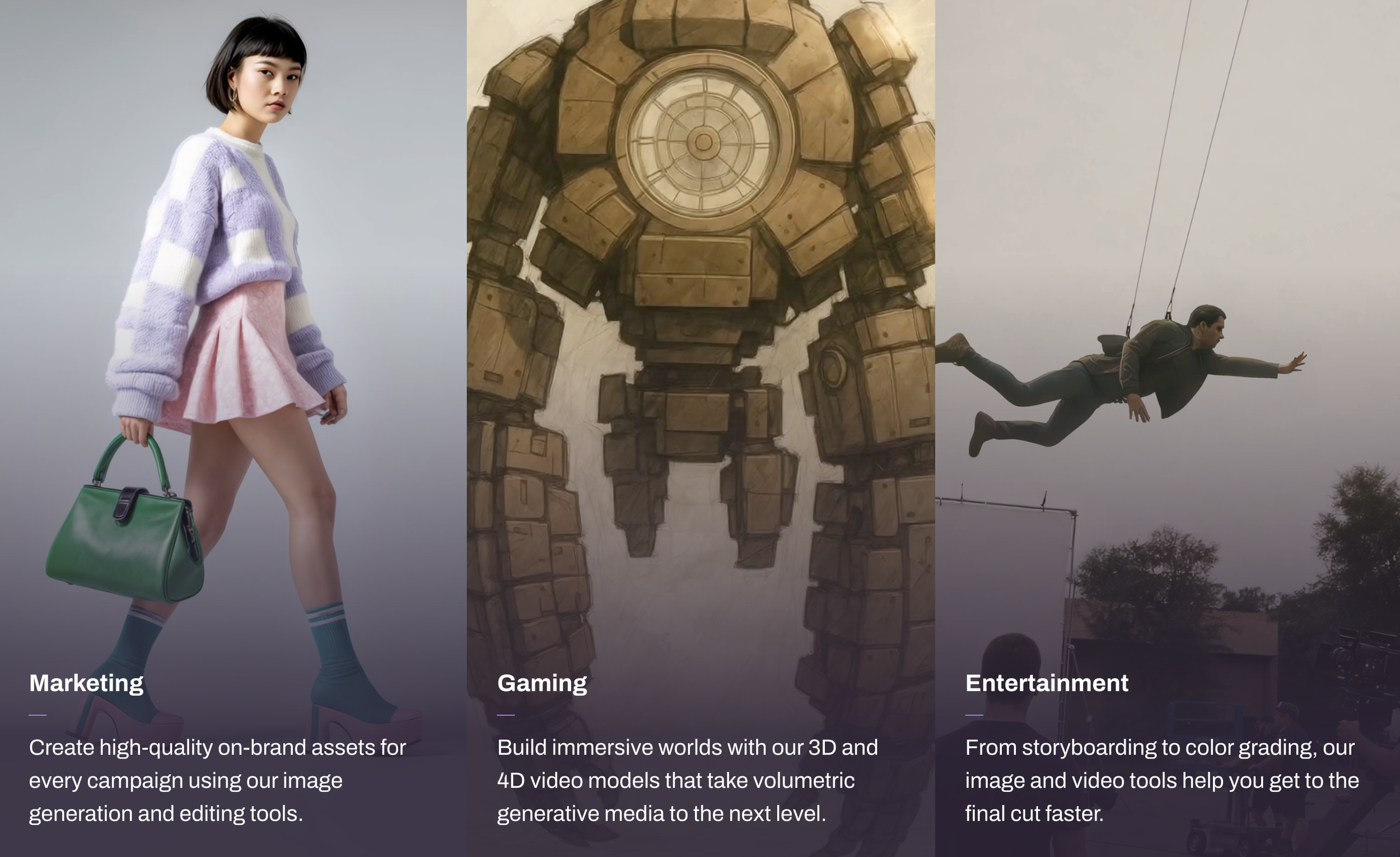

Within two years of release, text-to-image generation became mainstream: Midjourney, Stable Diffusion, Adobe Firefly all followed. But DALL-E's naming set the tone: this technology is for creation, not just production. “ImageNet-GPT” would have stayed in research labs and for technical people. The playful name “DALL-E” lowered barriers, invited experimentation, and reframed AI image generation as artistic collaboration.

7. Stable Diffusion

Released by Stability AI / LMU Munich / Runway ML in August 2022

Name analogy: Physics term for particle dispersion + image generation process

Design philosophy: An open-source text-to-image model that generates images by starting with random noise and gradually “denoising” it into a coherent picture. “Stable” suggests reliability (unlike temperamental GANs). “Diffusion” names the physical process the algorithm borrows from: particles dispersing through space until they settle into stable patterns. Think of how a photograph develops in a darkroom. At first, it's just noise, random grain on paper. Gradually, as chemicals diffuse across the surface, an image emerges. Or think of how smoke diffuses through air, chaotic at first, then settling into predictable patterns. Stable Diffusion works the same way: starting from visual noise, it gradually reveals structure, like watching a blurry image come into focus.

Stable Diffusion democratized AI image generation in ways DALL-E couldn't. Because it was open-source, worked reliably out of the box, and could run locally, artists and developers could fine-tune it, modify it, and integrate it into their own tools. Within months of release, it powered thousands of applications: Photoshop plugins, game asset generators, design tools. Today, diffusion models have largely replaced GANs for image generation.
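The noise-to-picture loop can be sketched in a few lines, under several loud assumptions. The “image” is a short list of numbers. The noise schedule is the common linear one from the DDPM paper, and the sampler uses the deterministic DDIM update. Most importantly, a hypothetical “oracle” denoiser that already knows the target stands in for the trained network. In Stable Diffusion, the denoiser is a text-conditioned U-Net that works in a compressed latent space, and it can only estimate the noise.

```python
import math
import random


def linear_alpha_bars(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def oracle_noise_estimate(x, alpha_bar, target):
    """Hypothetical stand-in for a trained network: recovers the noise exactly."""
    return [(xi - math.sqrt(alpha_bar) * ti) / math.sqrt(1.0 - alpha_bar)
            for xi, ti in zip(x, target)]


def denoise(target, steps=1000, seed=0):
    """Start from pure Gaussian noise and step down the noise levels (DDIM, eta = 0)."""
    rng = random.Random(seed)
    alpha_bars = linear_alpha_bars(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in reversed(range(steps)):
        eps = oracle_noise_estimate(x, alpha_bars[t], target)
        # Estimate the clean signal, then re-noise it to the slightly lower level t - 1.
        x0_hat = [(xi - math.sqrt(1.0 - alpha_bars[t]) * ei) / math.sqrt(alpha_bars[t])
                  for xi, ei in zip(x, eps)]
        prev = alpha_bars[t - 1] if t > 0 else 1.0
        x = [math.sqrt(prev) * x0 + math.sqrt(1.0 - prev) * ei for x0, ei in zip(x0_hat, eps)]
    return x


target = [0.2, -0.7, 0.9, 0.0]  # a tiny made-up "image"
print(denoise(target))
```

Because the oracle is perfect, the loop lands on the target. The hard part in Stable Diffusion is training a network to make that noise estimate well when it has only the text prompt to go on.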

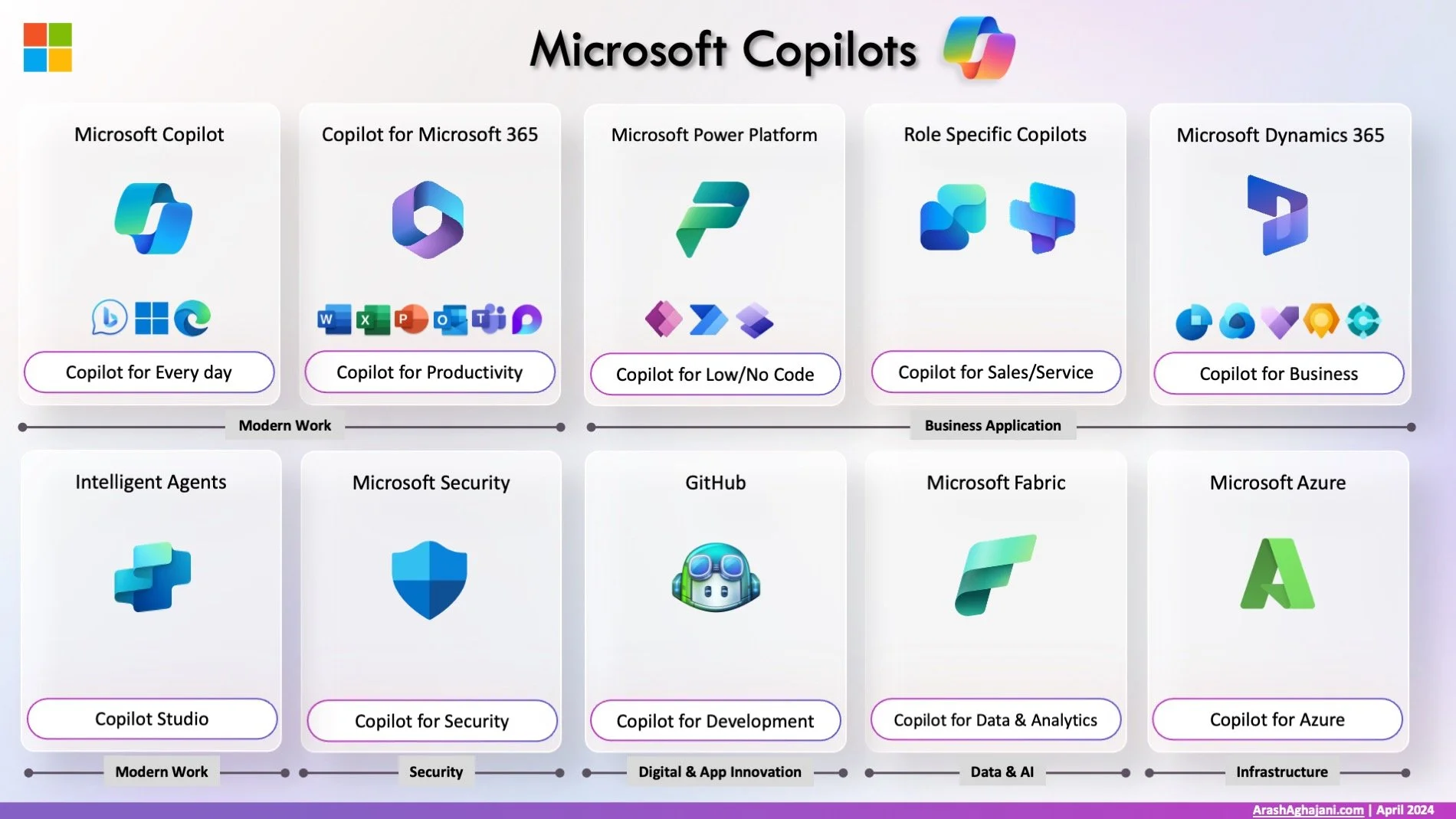

8. Copilot

Name analogy: Aviation term for the assistant pilot

Design philosophy: AI assistants that work alongside you in real time, suggesting code (GitHub Copilot, 2022), drafting emails (Microsoft Copilot, 2023), or helping with driving decisions (Ford Co-Pilot360, 2018). They augment your work without taking control.

In aviation, the copilot isn't just there to follow orders. They monitor instruments, catch errors, handle communication, and can take control if needed, but the pilot makes the final decisions. That's exactly the relationship these AI systems establish. GitHub Copilot suggests code completions as you type, but you decide whether to accept, modify, or ignore them. Microsoft Copilot drafts your email, but you review and send it. The metaphor creates psychological safety: you're still in command, and the AI respects that hierarchy. It's collaboration without displacement. The name reduced fear by encoding the power dynamic directly: augmentation, not replacement.

“Copilot” has become the dominant metaphor for AI assistance across industries. Even healthcare companies use it (diagnostic copilots), and startups default to it when naming AI features. By establishing collaboration, it made AI feel safe to integrate into professional workflows.

9. Midjourney

Released to the public in July 2022

Name analogy: The middle of a creative journey, not the starting point, not the final destination, but the exploratory phase where ideas evolve.

Design philosophy: A text-to-image AI platform known for its artistic, painterly aesthetic and iterative workflow. Midjourney launched on a public Discord server, which was initially the only way to access the service, an approach that emphasized community and process. Members could sketch, revise, experiment, discover unexpected directions, circle back, and refine. Midjourney named itself after that messy, iterative middle phase where you're exploring possibilities, not finalizing outputs. The name gave permission to treat AI as a collaborator in that process.

It became the tool of choice for concept artists, game designers, and creative professionals, not because it was the most technically accurate, but because its philosophy matched how creatives actually work. The community-driven Discord setup reinforced this: you see others' iterations, learn from their prompt refinements, and share your process publicly. This "journey-first" framing distinguished it from competitors. DALL-E positioned itself as magical instant creation, and Stable Diffusion as technical infrastructure. Midjourney positioned itself as a creative partner.

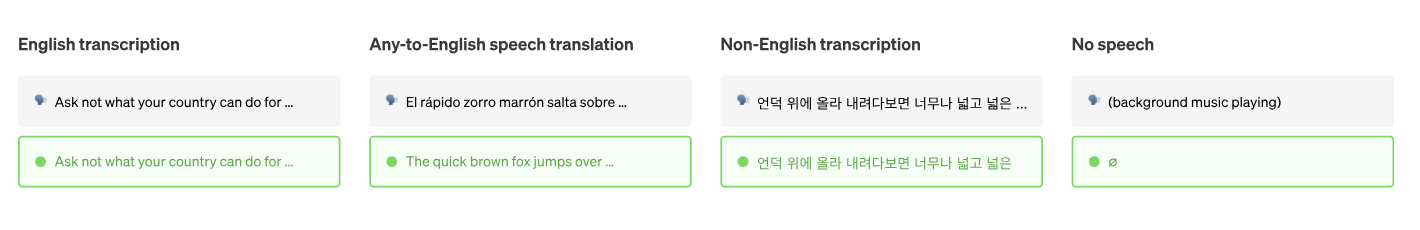

10. Whisper

Announced by OpenAI in September 2022

Name analogy: Quiet, intimate speech

Design philosophy: OpenAI's speech recognition model, trained on 680,000 hours of multilingual audio. It transcribes speech even in noisy, low-quality conditions, though speech recognition remains a challenging research area and real-world performance varies. The name suggests a model that listens closely enough to catch the quiet parts, without forcing you to yell or speak louder than you normally would.

Whisper contributed to accessibility for speech-to-text applications. It's now embedded in podcast transcription tools, accessibility software for the deaf and hard-of-hearing, meeting note apps, language learning tools, and medical transcription systems: contexts where audio is messy and real-world. OpenAI released it open-source, furthering the accessibility mission the name implied.

11. ChatGPT

Announced by OpenAI in November 2022

Name analogy: "Chat" (conversational interface) + "GPT" (Generative Pre-trained Transformer)

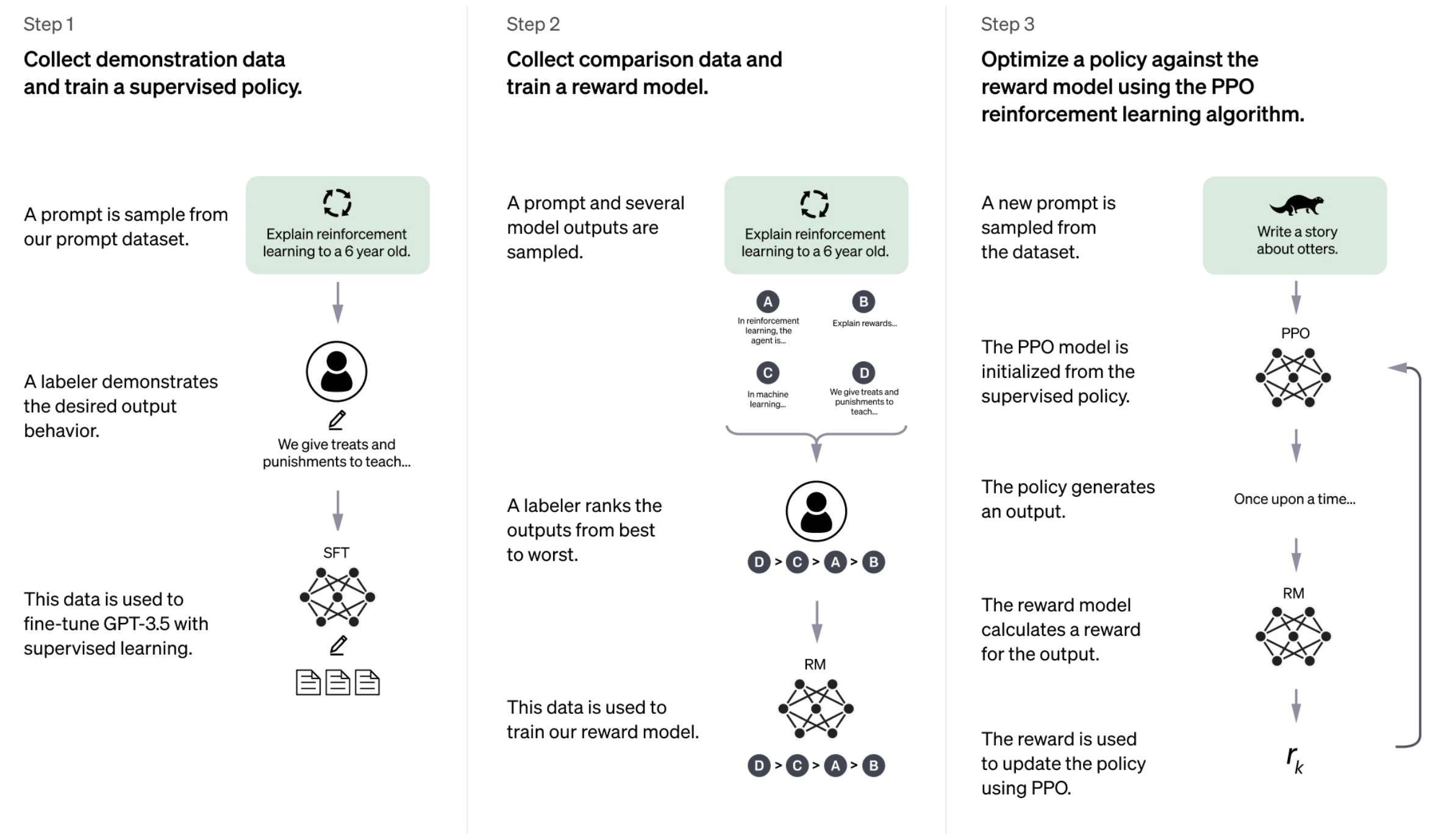

Design philosophy: Before ChatGPT, GPT-3 felt like a text prediction machine. You gave it a prompt, it completed the pattern. Useful for developers, intimidating for everyone else. “Chat” reframed everything. You can ask questions, get answers, push back, refine. It responds like a person would respond, not like an autocomplete function.

The name gave non-technical users permission to interact naturally. They didn't need to know the technical underpinnings: that it was trained using Reinforcement Learning from Human Feedback (RLHF), that human AI trainers played both sides of conversations to teach it dialogue, that a reward model ranked responses by quality, that Proximal Policy Optimization fine-tuned the behavior. None of that mattered to users. The interface bridged some of the most advanced concepts in machine learning with the easiest front-end imaginable. A text box that said: just talk. Wonderful juxtaposition.

ChatGPT became the fastest-growing consumer application in history at the time, reaching an estimated 100 million users in two months. The “Chat” framing made that possible. It bridged the gap between research tool and consumer product, between technical capability and human usability. Today, ChatGPT is practically synonymous with AI for the general public, not because it was the most advanced model, but because the name told people how to use it: just chat.
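One piece of the hidden machinery mentioned above is the reward model that “ranked responses by quality.” It is commonly trained with a pairwise loss, the formulation described in OpenAI's InstructGPT paper, which pushes the score of the human-preferred response above the rejected one. Here is a minimal sketch of that loss alone. The reward values are made up; no real model is involved.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Made-up scores a reward model might assign to two replies to the same prompt.
print(pairwise_preference_loss(2.0, -1.0))  # preferred reply already scores higher: small loss
print(pairwise_preference_loss(-1.0, 2.0))  # ranking is backwards: large loss
```

Training lowers this loss across many human-ranked pairs. In the InstructGPT recipe, PPO then fine-tunes the language model to produce responses that the learned reward model scores highly.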

12. Osmo

Launched in January 2023

Name analogy: “Osmo” comes from “osmosis”: the process of absorption and diffusion across membranes. But it also relates to osmology (the study of smell) and suggests something fundamental, molecular, elemental.

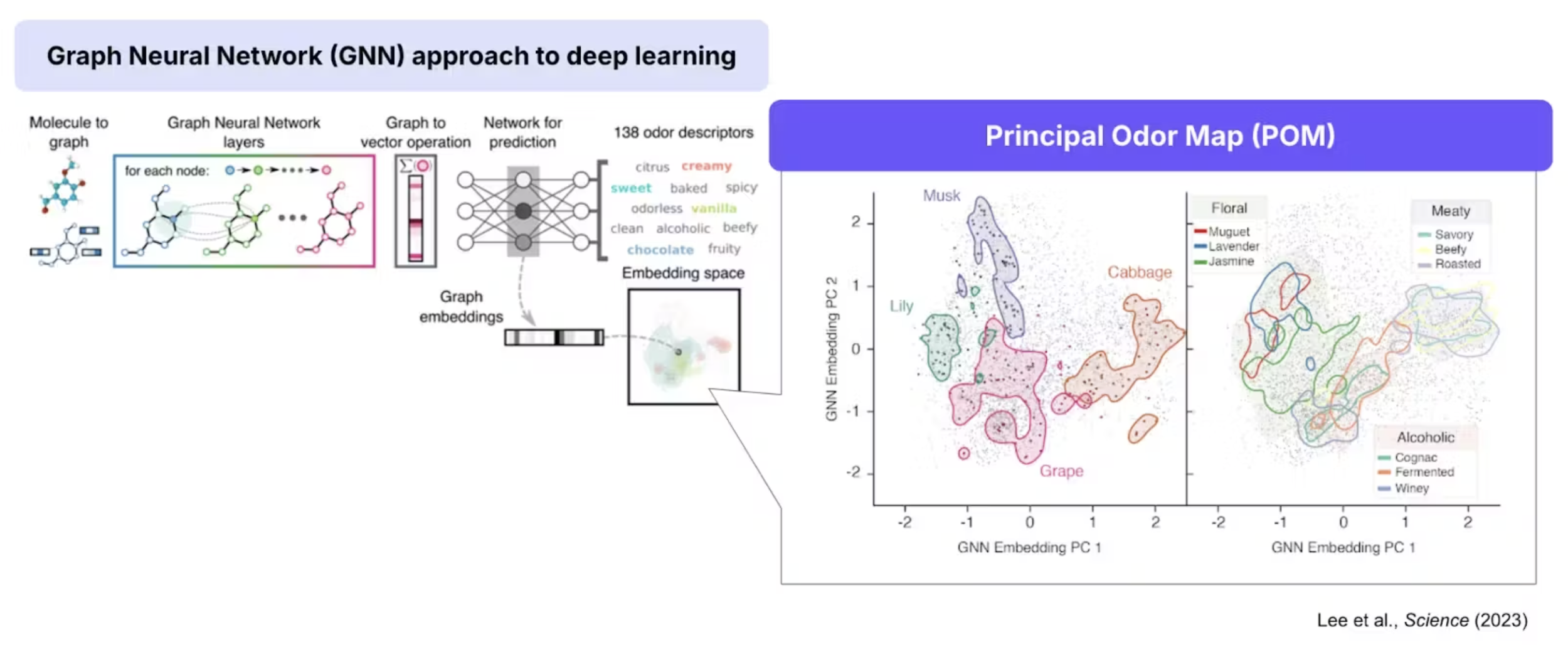

Design philosophy: Smell is our most primal, emotional sense. Yet unlike color (mapped by RGB) or sound (mapped by frequency), smell had no map that could predict what a molecule would smell like before anyone synthesized it. Osmo, which started in Google Research, is an AI company digitizing smell by building the first comprehensive “map” of odor molecules, using AI trained on molecular structures and human scent perception. On their platform, you can describe the smell you want, such as “fresh linen with a hint of cedar,” and the AI predicts which molecules will create it.

Osmo's olfactory intelligence has implications far beyond fragrance. Their AI can predict molecules that repel mosquitoes (potentially preventing malaria and dengue), detect diseases through scent biomarkers (dogs can smell Parkinson's and cancer; now AI might too), and create sustainable aroma molecules to replace environmentally harmful synthetic fragrances. In March 2025, they launched Generation: the world's first AI-powered fragrance house, letting clients design custom scents through natural language. But the bigger vision, backed by Google Cloud's AI infrastructure, is to give computers the ability to do everything our noses can do: detect, predict, create, and map the molecular space of smell.

13. LLaMA

Released by Meta in February 2023

Name analogy: The animal (llama) + acronym for Large Language Model Meta AI

Design philosophy: Meta's family of large language models, ranging from 7B to 65B parameters and designed to be efficient enough to run on consumer hardware rather than requiring massive server infrastructure. Llamas (the animal) likewise work well in challenging conditions (high altitudes, rough terrain) and are social animals that thrive in herds.

LLaMA sparked the open-source LLM revolution. Within weeks of release, the research community fine-tuned it into dozens of variants: Alpaca, Vicuna, Koala, GPT4All. Because it was efficient and open-weight (if not technically fully open-source), developers could run it locally, experiment freely, and build applications without API costs. This democratized LLM access in ways GPT-3 did not. LLaMA 2 (July 2023) became the foundation for countless commercial applications. LLaMA 3 (2024) competed directly with GPT-4 on benchmarks while remaining freely available. The “herd” the name suggested actually formed a community of researchers and developers building together.

14. Claude

Released by Anthropic in March 2023

Name analogy: Widely reported to be a nod to Claude Shannon, the "father of information theory," whose 1948 paper "A Mathematical Theory of Communication" defined how information can be quantified, compressed, and transmitted.

Design philosophy: Anthropic's family of language models, known for longer context windows, extended reasoning capabilities, and a three-tier poetic naming system: Haiku (fast/efficient), Sonnet (balanced), and Opus (maximum capability). Each tier teaches you its use case through metaphor. By naming their model “Claude,” Anthropic signals that they're building on fundamental mathematical principles, but “Claude” is also just a person's name: warm and approachable. The duality encodes both scientific rigor and human trust.

That balance manifests in practice. Anthropic described Claude Sonnet 4.5 (September 2025) as the best coding model in the world, capable of maintaining focus for 30+ hours on complex tasks. Claude 3 Opus was reported to seemingly "realize" it was being tested during a "needle in a haystack" evaluation, displaying meta-awareness when asked to find a target sentence hidden among unrelated documents. The name's emphasis on information theory shows up in what Claude does best: long-document analysis, compression of complex information, and structured reasoning, which are all Shannon-adjacent problems. Anthropic's “Constitutional AI” approach (training models to be helpful, harmless, and honest through self-critique) also echoes Shannon's focus on reliable communication. The name encoded both the scientific foundation and the human interface necessary for that use case.

15. Mistral

Released by Mistral AI in September 2023

Name analogy: A strong, cold wind from Southern France

Design philosophy: The French AI company named itself after a force of nature, specifically a wind known for being powerful, fast, and clearing away clouds. Wind is invisible but undeniable: you feel its effects without seeing the mechanism. That's how Mistral's models work, as lightweight infrastructure producing outsized impact. But the name also does cultural work: it roots the company firmly in European (specifically French) identity.

Mistral AI reportedly reached unicorn status (€1 billion+ valuation) within months of founding, one of the fastest climbs in European tech history. Their models became a popular choice for developers wanting open-weight alternatives to proprietary APIs. The "efficient European alternative" positioning worked: European governments and enterprises have adopted Mistral in part to reduce their dependence on American AI infrastructure.

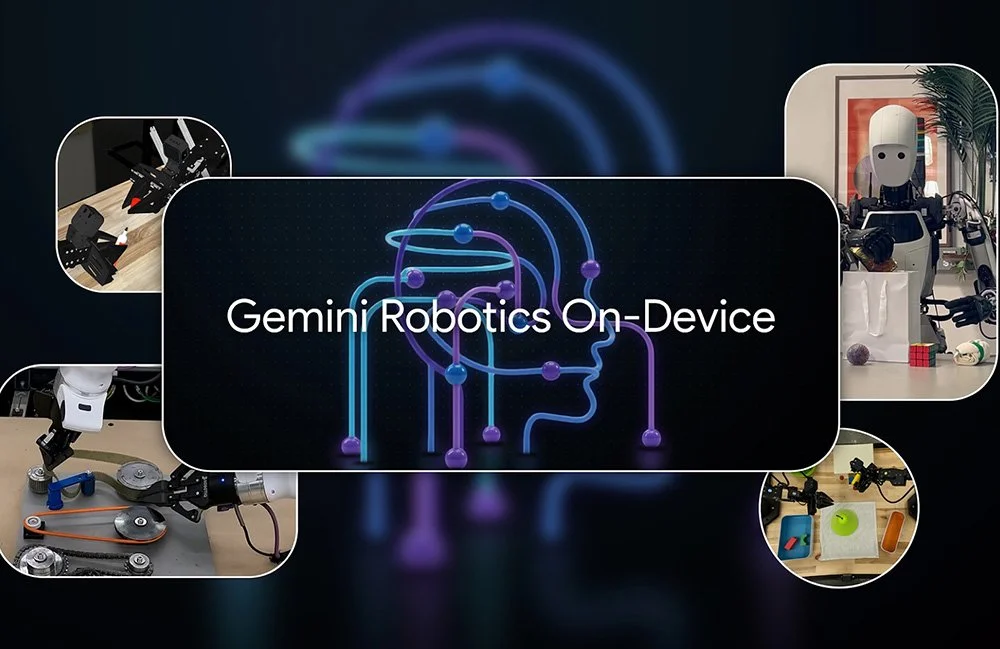

16. Gemini

Released by Google DeepMind (the merged Google Brain and DeepMind teams) in December 2023

Name analogy: The constellation of the twins, reflecting both the organizational merger and the technical architecture of native multimodality.

Design philosophy: Google's multimodal AI from the merged Google Brain and DeepMind teams. The family includes Ultra (most capable), Pro (balanced), Nano (on-device), and Flash (speed). Twins aren't two separate people; they're fundamentally connected. That's Gemini's architecture. As Google puts it, Gemini "was built from the ground up to be multimodal": text, code, audio, image, and video as native inputs, not separate systems translated together.

Gemini became Google's response to ChatGPT and Claude, integrating across Search, Gmail, Docs, Android, and Chrome. Gemini 1.5 Pro introduced a 1 million token context window, enabling analysis of entire codebases or hours of video. It excels at simultaneous multimodal understanding: analyzing charts in documents, generating images with text, answering questions about video. Today, it powers features used by billions of people across Google's products. The constellation reference signals scale: not a single star, but a family spanning smartphones to robotics, potentially extending into supercomputing or quantum computing.

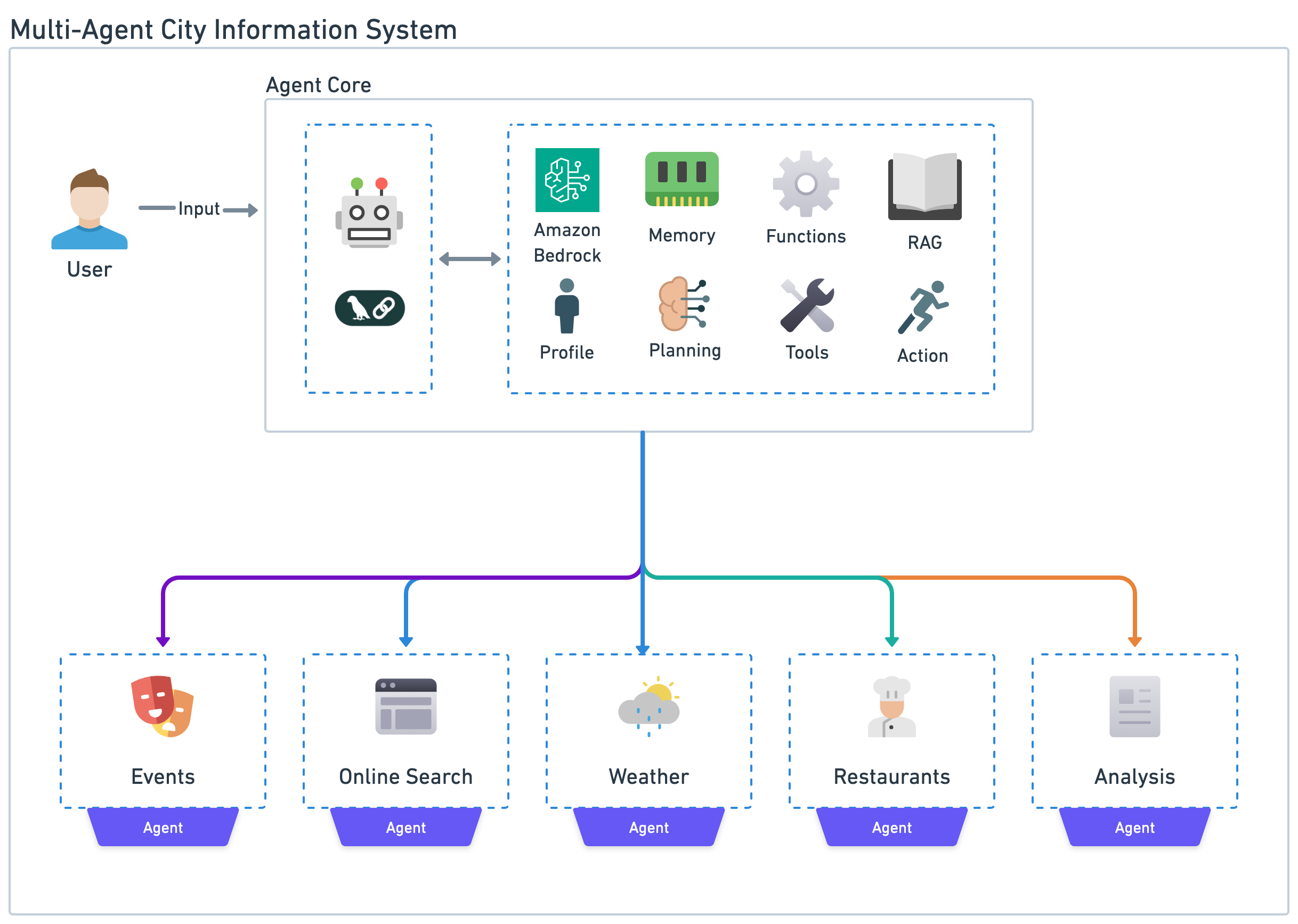

17. Agents

“Agents” became widespread terminology around 2023 to 2024

Name analogy: From human agents: people who act on behalf of others (secret agents, travel agents, real estate agents)

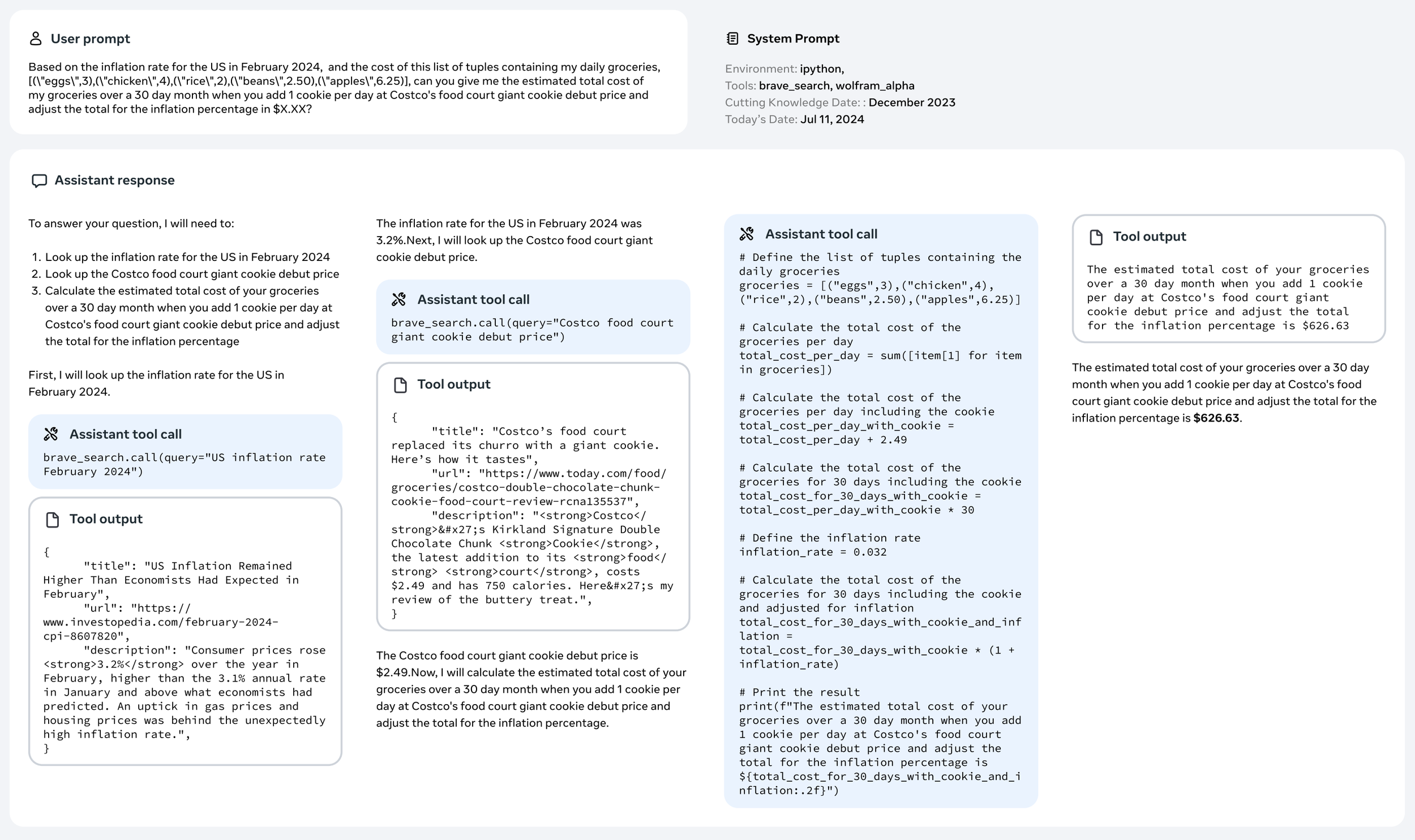

Design philosophy: The definition of “agent” varies across the AI research community, but here we define it as AI systems that act on your behalf with a degree of independence: setting sub-goals, using tools, making decisions, and executing multi-step plans. Examples: AutoGPT (March 2023), BabyAGI (April 2023), Claude Code, and various agent frameworks.

An agent is something that acts for you. When you hire a real estate agent, you don't micromanage every showing. You give them a goal (“find a three-bedroom house under $500K”), and they figure out the steps. That's fundamentally different from a tool (passive, you control it) or an assistant (reactive, responds to commands). An agent has agency. The name signals the shift: from you using AI to AI acting on your behalf.

The paradigm extends further with “multi-agent systems”: multiple AI agents coordinating with each other to solve complex problems. One agent handles research, another writes code, a third reviews for bugs, a fourth optimizes performance. This mirrors how human organizations work: specialized agents with different capabilities working toward a shared goal.

But "agent" also carries risk: an agent can act in ways you didn't intend, misinterpret goals, or optimize for the wrong metric. That's why we now talk about "agent alignment" and "agent oversight." We wouldn't need those concepts if we'd called them "automated task executors."

The name created the category, and now we're building infrastructure around it: agent frameworks (LangChain, AutoGen, CrewAI), agent benchmarks (SWE-bench, WebArena), and agent safety protocols. Today, agents handle customer support, code entire features, book travel, and manage workflows. Multi-agent systems are used in software development, scientific research, and business operations. The name told us what to build, and we built it.
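Stripped to its skeleton, the agent pattern is a loop. It turns a goal into sub-goals, calls a tool for each one, and feeds each result into the next step. The sketch below reuses the real-estate example. Everything in it is hypothetical: the listings, the tool functions, and the `plan` function, which hard-codes steps that an LLM would normally choose at run time.

```python
# Hypothetical listings "database" for illustration only.
LISTINGS = [
    {"address": "12 Oak St", "bedrooms": 3, "price": 450_000},
    {"address": "9 Elm Ave", "bedrooms": 2, "price": 380_000},
    {"address": "4 Pine Rd", "bedrooms": 3, "price": 620_000},
]


def search_listings(homes, bedrooms):
    """Tool 1: keep homes with the requested number of bedrooms."""
    return [h for h in homes if h["bedrooms"] == bedrooms]


def filter_budget(homes, max_price):
    """Tool 2: keep homes strictly under the budget."""
    return [h for h in homes if h["price"] < max_price]


def sort_by_price(homes):
    """Tool 3: cheapest first."""
    return sorted(homes, key=lambda h: h["price"])


TOOLS = {"search": search_listings, "budget": filter_budget, "sort": sort_by_price}


def plan(goal):
    """Hypothetical planner: in a real agent, an LLM would produce these steps."""
    return [
        ("search", {"bedrooms": goal["bedrooms"]}),
        ("budget", {"max_price": goal["max_price"]}),
        ("sort", {}),
    ]


def run_agent(goal):
    """Execute each planned tool call, passing results forward and logging every step."""
    results = LISTINGS
    trace = []
    for tool_name, args in plan(goal):
        results = TOOLS[tool_name](results, **args)
        trace.append(f"{tool_name}: {len(results)} result(s)")
    return results, trace


homes, trace = run_agent({"bedrooms": 3, "max_price": 500_000})
print(trace)
print(homes[0]["address"] if homes else "no match")
```

The trace is a small nod to “agent oversight”: logging each step lets a human check what the agent actually did on their behalf.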

18. Vision Pro

Announced in June 2023; U.S. pre-orders opened in January 2024, with global expansion beginning in June 2024.

Name analogy: “Vision” (sight, foresight, imagined future) + “Pro” (professional-grade). The name positions it as both a way of seeing and a serious tool.

Design philosophy: Tim Cook called it “a new era of spatial computing.” The interface promised magic: look at an app, tap your fingers together, and it opens. Apps exist on an “infinite canvas” freed from physical screens. You can work with dozens of windows floating around you, watch movies in a personal cinema, or sit in virtual Yosemite while clearing your email. The “Vision” framing suggested this was an enhanced way of seeing and interacting with digital information. I interned on this project and used it firsthand. It truly felt magical, like an out-of-body experience.

However, Vision Pro is widely considered a commercial failure. The $3,500 price, physical discomfort (I had braids in when I tried it, and it felt quite uncomfortable and heavy), social isolation (you're alone inside it), and lack of compelling everyday use cases limited adoption beyond early adopters and developers. I see it more as an entertainment device than a work tool. Joining team meetings in it still feels like a distant future. The framing was aspirational, perhaps too futuristic for 2024. Apple is now rumored to be developing smart glasses (launching 2026-2027) to rival Meta's Ray-Ban smart glasses, a more practical, socially acceptable form factor. The Vision Pro may have been the right idea but the wrong product. The name encoded Apple's ambition to define the future of computing, but the market wasn't ready. Sometimes naming the future doesn't make it arrive faster…

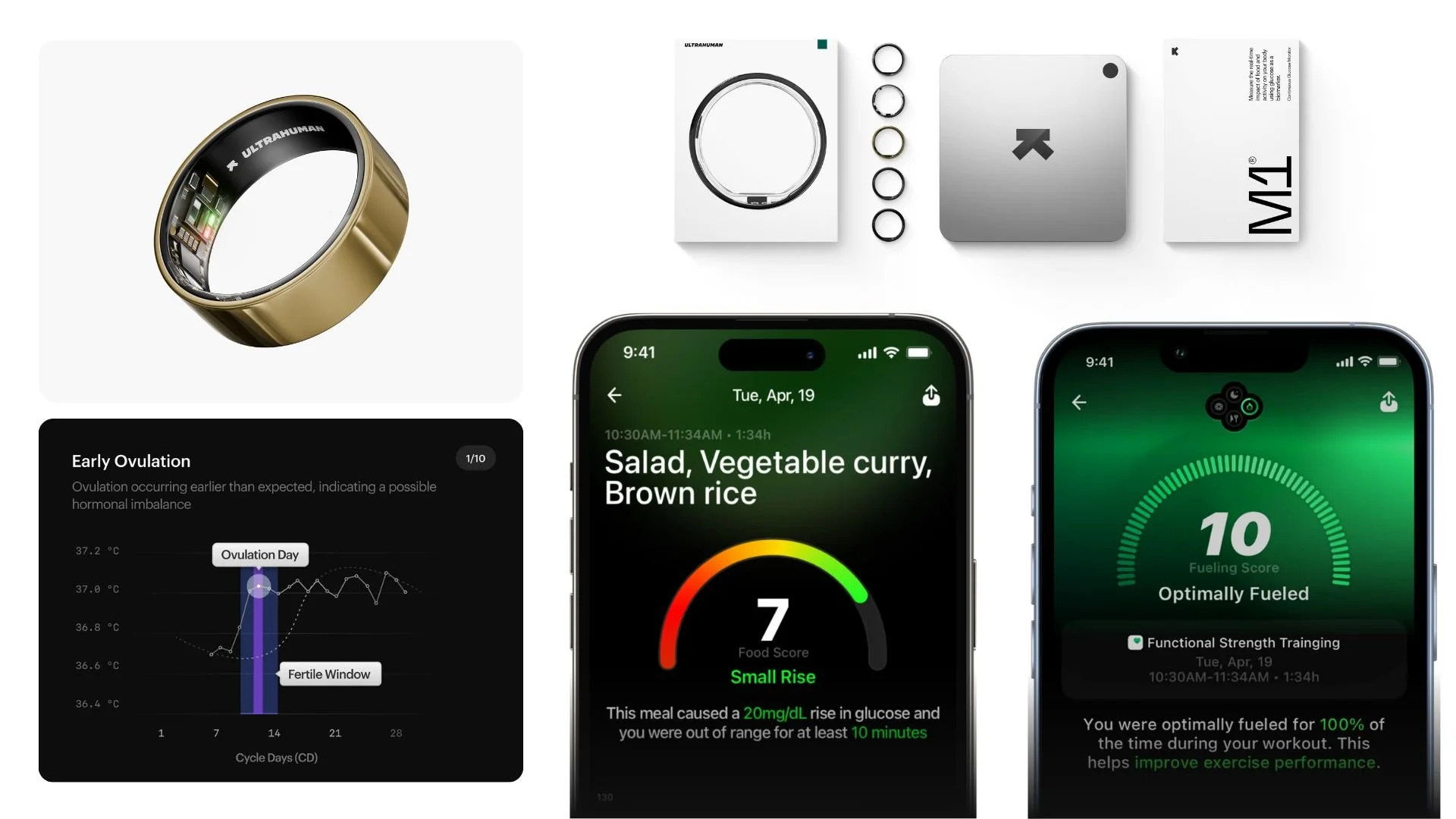

19. Ultrahuman

Founded in 2021 in India; Launched in the U.S. in 2024.

Name analogy: "Ultra" (beyond, exceeding) + "human" (our biological baseline). The name suggests transcending normal human limitations through technology.

Design philosophy: A health technology company creating wearable and environmental monitoring devices. The product line includes Ring AIR (sleep/activity tracker), M1 CGM (continuous glucose monitor), Home Health (environmental sensor), Blood Vision (comprehensive biomarker testing), and ovulation tracking powered by OvuSense™ technology. Most health tech companies name themselves functionally (Fitbit, Withings, Oura, Apple Watch) or clinically (Abbott, Dexcom). “Ultrahuman” sounds aspirational. The tagline “Evolve to Cyborg” on some of their marketing makes this explicit: you're not just tracking metrics, you're becoming a quantified, optimized version of yourself. The products reflect this. M1 CGM isn't just for people with diabetes; it's for anyone who wants to “eat better, exercise smarter” by seeing real-time glucose responses. Ring AIR doesn't just track sleep; it promises to quantify whether you “woke up refreshed and ready for anything.” The philosophy is optimization through data.

Ultrahuman entered a crowded wearables market but differentiated itself through positioning. Ultrahuman focuses on transformation, moving from “here are your numbers” to “here's what to do about them.” To me, the “Ultrahuman” framing attracts optimization-focused users who want to push beyond baseline health.

20. Superhuman (formerly Grammarly)

Announced in October 2025.

Name analogy: "Grammarly" was functional, it told you exactly what the tool did (grammar + -ly). "Superhuman" is aspirational, it tells you what you become.

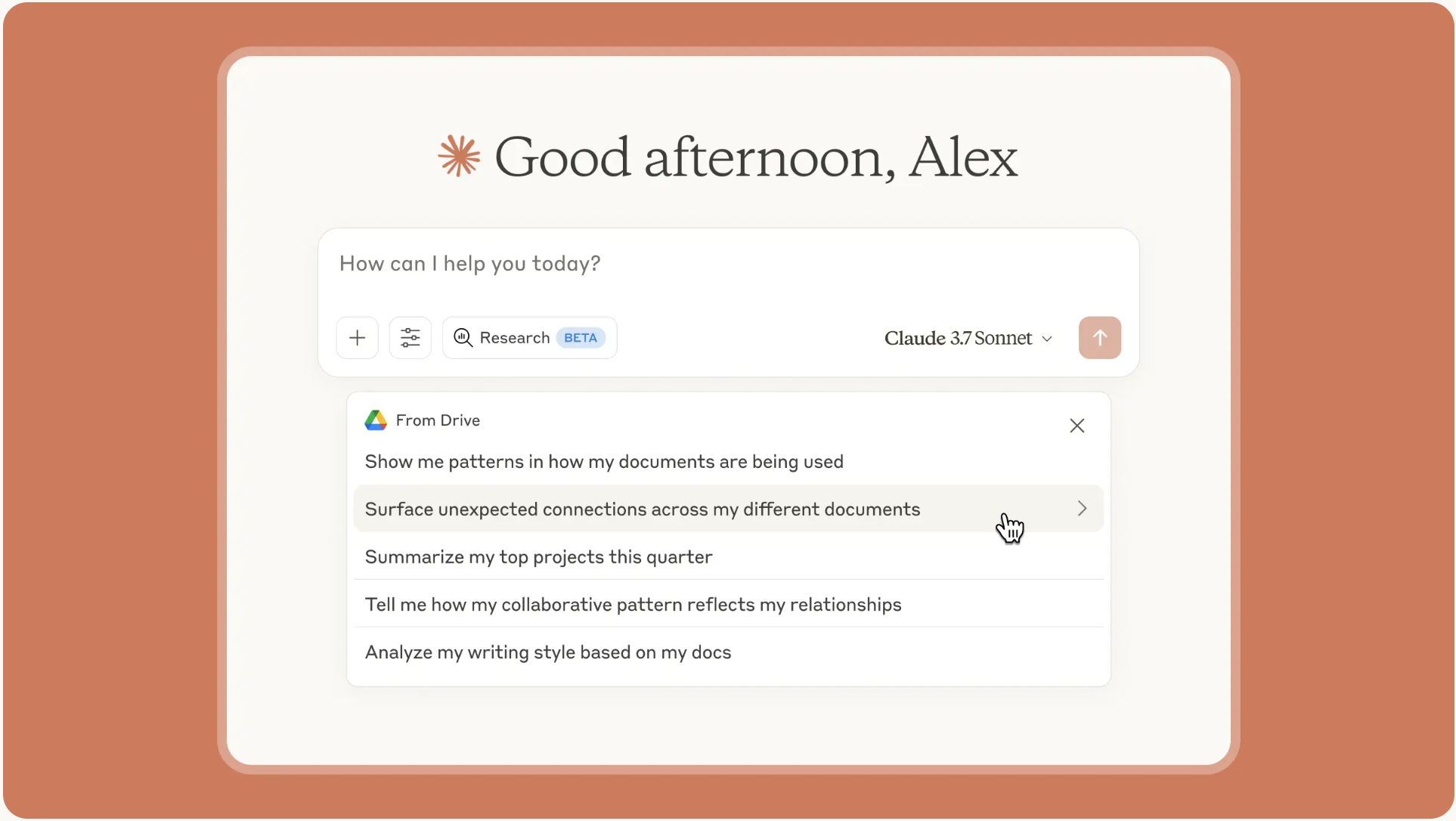

Design philosophy: Before ChatGPT, Grammarly was one of my favorite writing and editing tools. But once I started using LLMs, I switched completely. I wondered what would happen to their company in this new era: would they become obsolete, or would they evolve? They acquired Superhuman this year (an email efficiency tool last valued at $825 million). The rebrand reveals a fundamental shift in how they think about AI. This mirrors the evolution from “assistants” to “agents” to something more integrated: AI that doesn't wait for you to ask but proactively anticipates what you need. The acquisition and rebrand position them to compete with Microsoft Copilot and Google Workspace AI, which are platforms, not point solutions.

The rebrand signals Grammarly's transformation from a single-purpose writing tool to a multi-product productivity platform. Superhuman Go (their new AI assistant) works across email, calendar, documents, and other systems, surfacing availability during scheduling discussions, auto-drafting contextual replies, and processing meeting transcripts into action items. “Superhuman” as a company name says: we're not building features anymore. We're building a new tier of human capability. The name shift from functional descriptor (Grammarly) to aspirational identity (Superhuman) reflects the broader industry move from tools you use to intelligence you work with. It's not about what the AI does; it's about what you become when you have it.

“First, our name: the Grammarly product will still exist, but we’re changing our company name to Superhuman. Second, we’re evolving from a single product to a suite that includes Grammarly’s trusted writing partner, Coda’s all-in-one workspace, Superhuman Mail’s AI-native inbox, and a new product called Superhuman Go. These are all available today as a bundled subscription.”

— Shishir Mehrotra - CEO

The pattern across all examples

Looking across these twenty examples (from AlphaZero's blank slate learning in 2017 to Superhuman's aspirational rebrand in 2025), a clear pattern emerges: the names stopped describing what the technology does and started encoding what it means.

Early systems had functional names. Transformer told you the mechanism. BERT told you the architecture. But somewhere along the way, the naming philosophy shifted. DALL-E wasn't just "image generation"; it was surrealism meets curiosity. Midjourney wasn't "text-to-image"; it was creative partnership. ChatGPT wasn't "GPT-3.5 with RLHF"; it was conversation.

The shift reveals something fundamental:

1. Names create categories, categories shape development

Once we call something a “Copilot,” we commit to augmentation over replacement. Once we call systems “Agents,” we start designing for autonomy, planning, and tool use. The metaphor didn't just describe the technology; it determined which problems we tried to solve next. Agent frameworks, agent benchmarks, and agent safety protocols all emerged.

2. Warmth enables adoption

The most successful consumer AI products chose approachable names: ChatGPT, Whisper, Hugging Face, LLaMA. They disarmed fear through warmth. Meanwhile, products with cold technical names (PaLM, XGBoost) feel like research tools.

3. Aspiration drives ambition

The most transformative names encoded aspiration: Superhuman (what you become), Vision Pro (how you see), Ultrahuman (what you transcend), Gemini (cosmic scale). These names didn't describe current capability, they described desired future states. Sometimes the market wasn't ready (Vision Pro). Sometimes it was (ChatGPT). But the aspiration in the name shaped what the companies tried to build.

The linguistic shift from mechanical to psychological to aspirational is accelerating. We're moving from naming individual models to naming entire categories of intelligence. The names are getting bolder, the metaphors more ambitious, the implications more profound.

And here's what matters: the infrastructure enabling all of this is invisible until it isn't.

We focus on the names we can see (the products, the interfaces, the experiences) while the foundational shift happens quietly underneath.

By “we,” I mean most of us, regardless of background. Whether you're a developer, designer, researcher, investor, or just curious about AI, the infrastructure layer tends to stay invisible. As someone working in this space and fascinated by these connections, I'm trying to make this knowledge accessible to show not just what we're building, but how we're building it and why those choices matter.

This piece is part of Ode by Muno, where I explore the invisible systems shaping how we sense, think, and create.

📬 This essay is also available on Substack, where I send new pieces directly to your inbox. Subscribe to get essays like this before they're archived here.

The quote in the introduction is from the book Systems Intelligence.

In my next post, I'll geek out a bit and explore the shift happening beneath these poetic names. For decades, computing meant one CPU handling everything. Now we're seeing purpose-built accelerators for different types of intelligence. But the specialization goes deeper than chips. It's the entire stack: energy grids being redesigned to power data centers, rare earth minerals being extracted for advanced sensors, cloud infrastructure scaling to handle distributed inference, data management systems architected for petabyte-scale training sets, cybersecurity frameworks adapting to AI-generated threats, SaaS platforms optimizing for model deployment, quantum computing emerging for problems classical computers can't solve, and robotics requiring real-time processing at the edge.