Why AI models have poetic names

“Information not fitting with [your] mental model tends to be neglected to ease the handling of the information. Mentality filter is opened by using multiple interpretations, symbols, and metaphors.”

I've been noticing something in AI research papers. The technical language is starting to sound... poetic.

Using metaphor, compression, and symbolism to encode meaning.

I was reading through the evolution of GPT-1 through GPT-5, Claude 1.0 through Sonnet 4.5, and Gemini's entire family tree, and I started cataloging the names. Not just to track versions, but to understand what each name, or each naming sequence, was meant to convey.

Anthropic’s poetic tier system

The most poetic LLM series of the three comes from Anthropic:

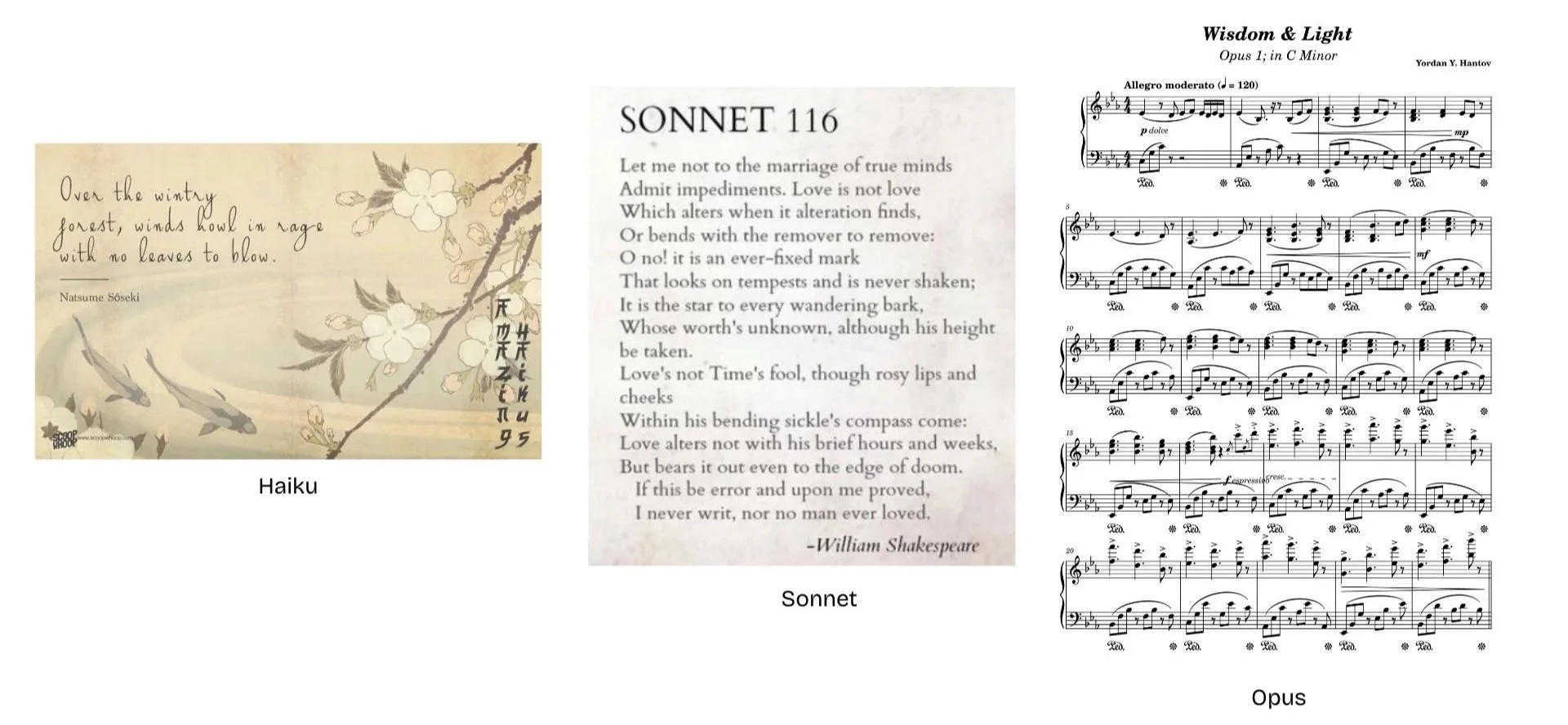

Haiku. Japanese minimalist poetry. 5-7-5 syllables. The whole point is compression: expressing depth with minimal tokens. Claude Haiku 4.5 is currently Anthropic's fastest model, and Haiku is the lowest-cost tier in the family. Near-instant responses.

Sonnet. A poetic form made famous in English by Shakespeare, with strict structure and rhyme: fourteen lines, a specific meter, constrained but expressive. Claude Sonnet sits in the middle of the family, balancing speed, cost, and capability. Claude 3.7 Sonnet (released February 2025) was billed by Anthropic as the first hybrid reasoning model on the market: it can respond almost instantly or think step by step at length. Through the API, you can cap that thinking with a “thinking budget” of up to 128K tokens of reasoning before the model answers. To put that in perspective: 128K tokens is roughly 96,000 words, or about 300 pages of a standard book. In September 2025, Anthropic released Sonnet 4.5 and called it “the best coding model in the world.”
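The page estimate rests on two rules of thumb, both assumptions rather than exact figures: English text averages roughly 0.75 words per token (the real ratio depends on the tokenizer and the text), and a typical printed page holds about 320 words. Under those assumptions, the arithmetic works out as:

```latex
128{,}000\ \text{tokens} \times 0.75\ \tfrac{\text{words}}{\text{token}} = 96{,}000\ \text{words},
\qquad
\frac{96{,}000\ \text{words}}{320\ \text{words/page}} = 300\ \text{pages}
```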

Opus. Latin for “work” or “composition.” In music it is used to catalog a composer's works: Opus No. 1, Opus No. 2. Opus is the most powerful tier in the family, designed for complex research tasks. Claude 3 Opus (March 2024) was the most intelligent model of its generation, ahead of Sonnet and Haiku. Opus 4.1 (August 2025) became one of the models powering Claude Code.

The metaphors teach you how to use them: Haiku for compression, Sonnet for balance, Opus for mastery.

Examples of artistic references

Different approaches to naming

Claude's poetic naming is the most explicit, but the other major model families tell their own stories through evolution.

OpenAI's GPT series follows a different logic: pure versioning (GPT-1, GPT-2, GPT-3, GPT-4), which is quite unintuitive, since the number tells you the generation but not what the model is for. Later variants reveal some intent: GPT-4o is “omni” (one model across text, audio, and images), and in the o1 series the “o” reportedly stands for “OpenAI.” Again, that does not tell you much.

Google's Gemini takes a different approach entirely: it is named after the constellation of the twins, symbolizing the merger of the Google Brain and DeepMind teams that created the model. Poetic. The family structure extends the metaphor: Ultra, Pro, Nano, Flash, like celestial bodies of different mass, speed, and brightness.

The shift from mechanical tools to human-adjacent names

Early machine learning had very mechanical names: principal component analysis, linear regression, random forest, XGBoost. “Random forest” sounds like industrial timber (mis)management, and “XGBoost” sounds like a mechanical upgrade that should outperform it. These names belonged to the world of tools. Instruments. Things you calibrate and deploy.

Even the explainability tools were mechanical. SHAP values and LIME are acronyms for “SHapley Additive exPlanations” and “Local Interpretable Model-agnostic Explanations.”

For text generation, ROUGE (Recall-Oriented Understudy for Gisting Evaluation) and BLEU (Bilingual Evaluation Understudy) were early metrics that simply counted overlapping word sequences (n-grams) between a model’s output and a reference text. ROUGE emphasized recall: how much of the reference the output captured. BLEU emphasized precision: how much of the output matched the reference.

I tend to forget their full names, so I remember them in French instead. ROUGE and BLEU feel like two temperaments of understanding. ROUGE, red, is generous and remembering, capturing as much of the original as possible. BLEU, blue, is calm and precise, saying only what can be verified.
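To make the recall/precision split concrete, here is a minimal sketch of the counting both metrics are built on. It is a simplification, not either metric's reference implementation: it tokenizes by splitting on whitespace, computes ROUGE-N recall against a single reference, and stops at BLEU's clipped n-gram precision, leaving out the geometric mean over n = 1 to 4 and the brevity penalty that full BLEU adds. The function names are illustrative.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram (as a tuple) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def overlap(candidate, reference, n):
    """Return (matched, candidate_total, reference_total) n-gram counts.

    Matches are clipped: an n-gram counts at most as many times as it
    appears in both texts (Counter's & keeps the minimum count).
    """
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    return sum((cand & ref).values()), sum(cand.values()), sum(ref.values())


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N recall: share of the reference's n-grams the candidate recovers."""
    matched, _, ref_total = overlap(candidate, reference, n)
    return matched / ref_total if ref_total else 0.0


def clipped_precision(candidate, reference, n=1):
    """BLEU's building block: share of the candidate's n-grams found in the reference."""
    matched, cand_total, _ = overlap(candidate, reference, n)
    return matched / cand_total if cand_total else 0.0


reference = "the cat sat on the mat".split()
candidate = "the cat sat".split()
print(rouge_n_recall(candidate, reference))     # prints 0.5
print(clipped_precision(candidate, reference))  # prints 1.0
```

The short candidate says nothing wrong, so its precision is perfect, but it leaves half the reference out, so its recall is 0.5. That gap is what full BLEU's brevity penalty exists to punish.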

Look at today's AI language: training, attention, memory, hallucination, alignment.

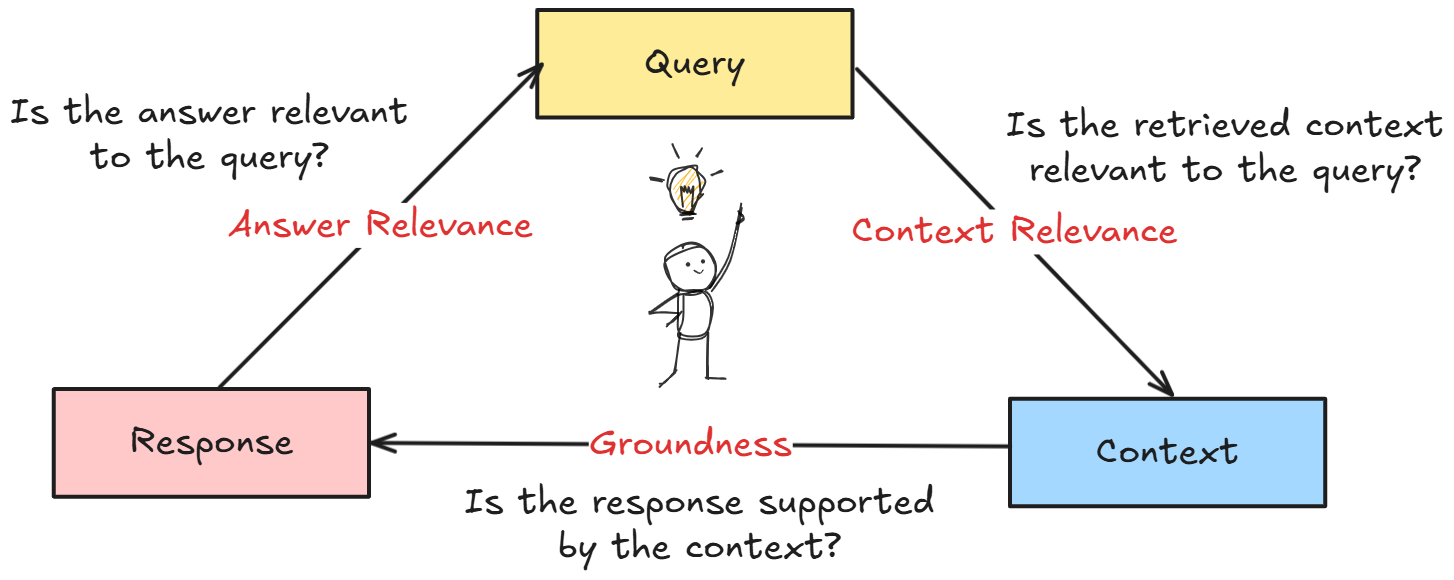

And look at how we evaluate these systems now. We don't just run BLEU scores anymore. We use “LLM-as-a-Judge.” We literally ask one language model to evaluate another language model's performance to assess relevance, accuracy, tone, groundedness. We've moved from counting tokens to asking for opinions. The language has grown human. And that changes how we relate to it.
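A minimal sketch of the LLM-as-a-Judge pattern, under loud assumptions: `call_judge` is a hypothetical stand-in for whatever model API you use (it takes a prompt string and returns the model's text reply), and the 1-to-5 rubric and the “criterion: score” reply format are illustrative choices, not a standard. The point is the shape of the loop: describe the criteria in words, let a second model respond, then parse its opinion back into numbers.

```python
import re
from typing import Callable, Dict

# Illustrative rubric built from the criteria named in the text.
RUBRIC = ("relevance", "accuracy", "tone", "groundedness")


def build_judge_prompt(question: str, answer: str, context: str) -> str:
    """Ask a judge model to score one answer from 1 to 5 on each rubric criterion."""
    criteria = "\n".join(f"- {c}: integer score from 1 to 5" for c in RUBRIC)
    return (
        "You are evaluating another model's answer.\n"
        f"Question: {question}\n"
        f"Context: {context}\n"
        f"Answer: {answer}\n"
        f"Score the answer on:\n{criteria}\n"
        "Reply with one line per criterion, formatted as 'criterion: score'."
    )


def parse_scores(reply: str) -> Dict[str, int]:
    """Extract 'criterion: score' pairs from the judge's free-text reply."""
    scores = {}
    for criterion in RUBRIC:
        match = re.search(rf"{criterion}\s*:\s*([1-5])", reply, re.IGNORECASE)
        if match:
            scores[criterion] = int(match.group(1))
    return scores


def judge(question: str, answer: str, context: str,
          call_judge: Callable[[str], str]) -> Dict[str, int]:
    """Send the rubric prompt to a judge model and turn its opinion into numbers."""
    return parse_scores(call_judge(build_judge_prompt(question, answer, context)))


# Hypothetical stand-in for a real model call, so the sketch runs offline.
def fake_judge(prompt: str) -> str:
    return "Relevance: 5\nAccuracy: 4\nTone: 5\nGroundedness: 3"


print(judge("What is BLEU?", "A precision-based overlap metric.",
            "BLEU compares n-grams with a reference.", fake_judge))
```

Asking for a fixed line format keeps the parsing simple; a structured format such as JSON is another common choice when the judge model supports it.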

When a regression model’s output is poor, we say it is “overfitting” or has a “high error rate.” But when GPT-4 invents a fake citation? We say it “hallucinated.” That word carries weight. It implies a mind producing false perceptions.

Academic research is a part of the shift

And probably has been for some time…

Some memorable examples of poetic naming in research:

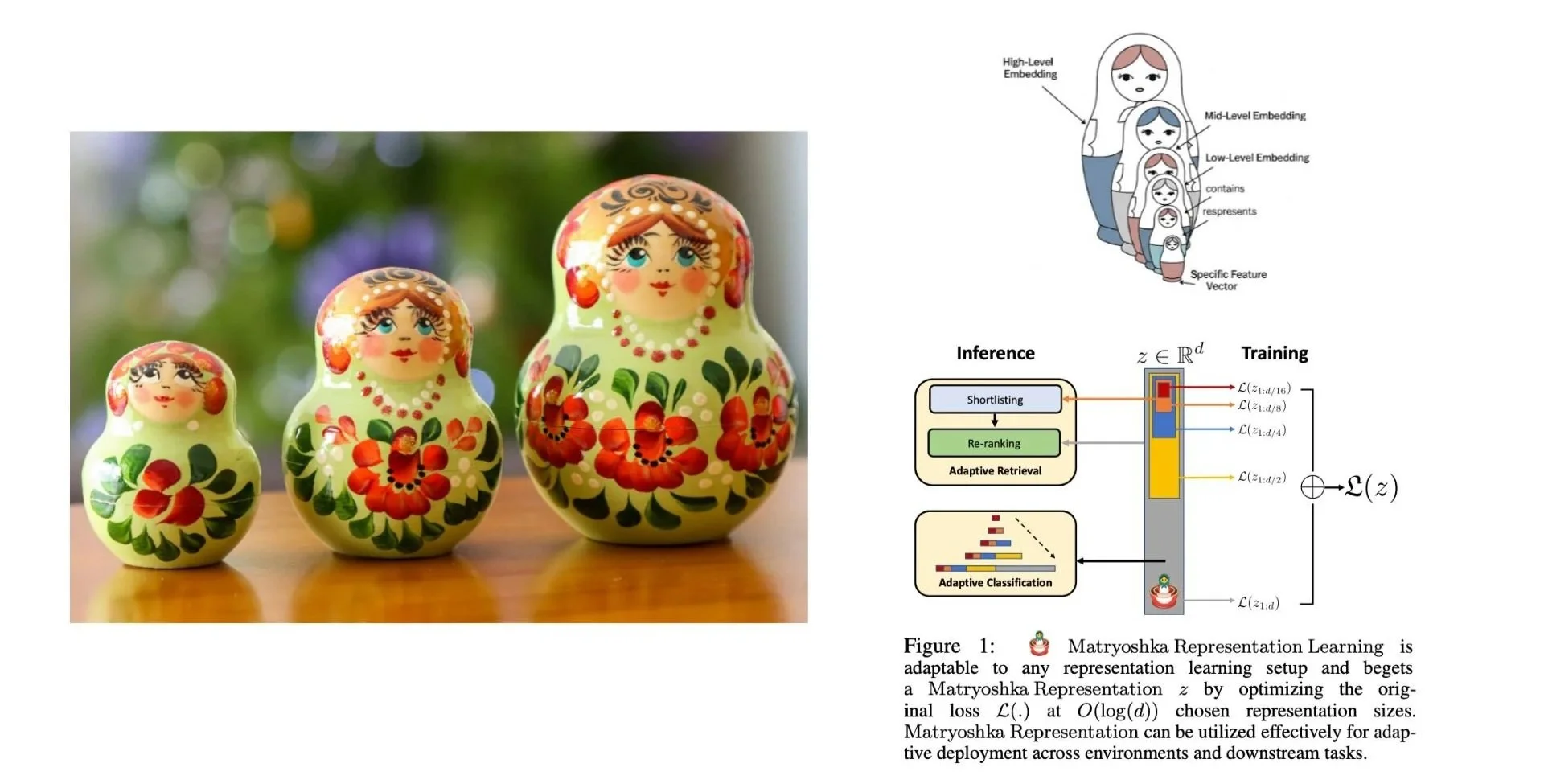

Matryoshka Representation Learning (paper): Matryoshka are Russian nesting dolls, each containing a smaller version of itself. The name is a playful, tactile metaphor for a technique that has since been adopted in embedding models from Google and OpenAI: one embedding whose leading dimensions also work as a smaller embedding on their own. It turns a technical optimization (adaptive embedding sizes) into something you can visualize and touch. The vocabulary shifted from “we're compressing data efficiently” to “we're revealing layers of understanding, like opening nested dolls.”
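At inference time, using a Matryoshka-style embedding can be as simple as keeping the first k dimensions and renormalizing. The sketch below shows only that mechanic, in plain Python. It assumes the input vector came from a model trained with Matryoshka Representation Learning, which is what makes a prefix meaningful on its own; the random vectors here are hypothetical stand-ins for real embeddings, so the printed similarities illustrate the calls, not the quality a trained model would give. The function names and the 768-dimension size are illustrative.

```python
import math
import random


def truncate_and_normalize(embedding, k):
    """Keep the first k dimensions (the 'inner doll') and rescale to unit length."""
    prefix = embedding[:k]
    norm = math.sqrt(sum(x * x for x in prefix))
    return [x / norm for x in prefix] if norm else prefix


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical stand-ins for embeddings from an MRL-trained model.
random.seed(0)
query = [random.gauss(0.0, 1.0) for _ in range(768)]
document = [q + random.gauss(0.0, 0.5) for q in query]  # a noisy near-duplicate

for k in (64, 256, 768):
    q_k = truncate_and_normalize(query, k)
    d_k = truncate_and_normalize(document, k)
    print(f"{k:>3} dims: cosine = {cosine(q_k, d_k):.3f}")
```

A smaller k means cheaper storage and faster search; it is the training objective, not this truncation step, that decides how much meaning survives in each inner doll.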

Michelangelo (paper): A long-context evaluation from Google DeepMind. The name reframes what the model is asked to do: not extracting data, but revealing understanding by chiseling away irrelevant information. It's sculptural language: the intelligence was always there in the marble (the context); you just need to remove what doesn't belong.

Prometheus (paper): In Greek myth, Prometheus is the Titan who gave fire (knowledge, technology) to humanity. The name suggests this evaluator isn't just scoring outputs; it's democratizing the ability to judge AI quality, carrying evaluation “fire” from proprietary models like GPT-4 to the open-source community.

Stochastic Parrots (paper): I found this one quite funny. “Stochastic” (random/probabilistic) + “Parrots” (mimicry without comprehension) suggests these models don't understand; they just repeat patterns. Debatable. The vocabulary shifted from neutral technical description to moral critique, implying something about consciousness, intentionality, and the difference between performance and genuine understanding.

Why this matters

Here's the thing: the general public doesn't panic about logistic regression, even though it's been denying loan applications and flagging resumes for years. I haven't read many articles about the existential threat of support vector machines... We understand those systems as tools.

We reserve our anxiety for the systems we've named like living things.

When you call something a “decision tree,” you debug it. When you call it an “agent,” you assume it can be toxic, hallucinate, recall, or even dream.

This matters because naming is the first act of design. Once we call something a Copilot, an Agent, a Judge, we've already encoded a set of expectations about autonomy, responsibility, and relationship. Right now, we're in the middle of a linguistic shift: we've moved from naming systems after what they compute to naming them after what they seem to be.

The names we choose today will determine the questions we ask tomorrow. Not just “does it work?” but “can we trust it?”

We're not just building AI. We're building the language we'll use to live with it.

This piece is part of Ode by Muno, where I explore the invisible systems shaping how we sense, think, and create.

📬 This essay is also available on Substack, where I send new pieces directly to your inbox. Subscribe to get essays like this before they're archived here.

I'm curious — what names have you noticed changing in your field? Not just AI, but anywhere. Leave a comment with what resonated, or what you're seeing in your own work. Share this with someone who thinks about language and technology this way. And if you want to follow this conversation as it evolves, subscribe to get new pieces as I write them.

The opening quote is from the book Systems Intelligence.

In my next post, I'll trace how naming philosophy evolved from 2017 to today (from AlphaZero to Agents to Superhuman) and why each name encoded a different vision of what AI could be.