Knowledge Cutoff Bias

Date: October 30, 2025

It’s important to know the limits of the tools you create with.

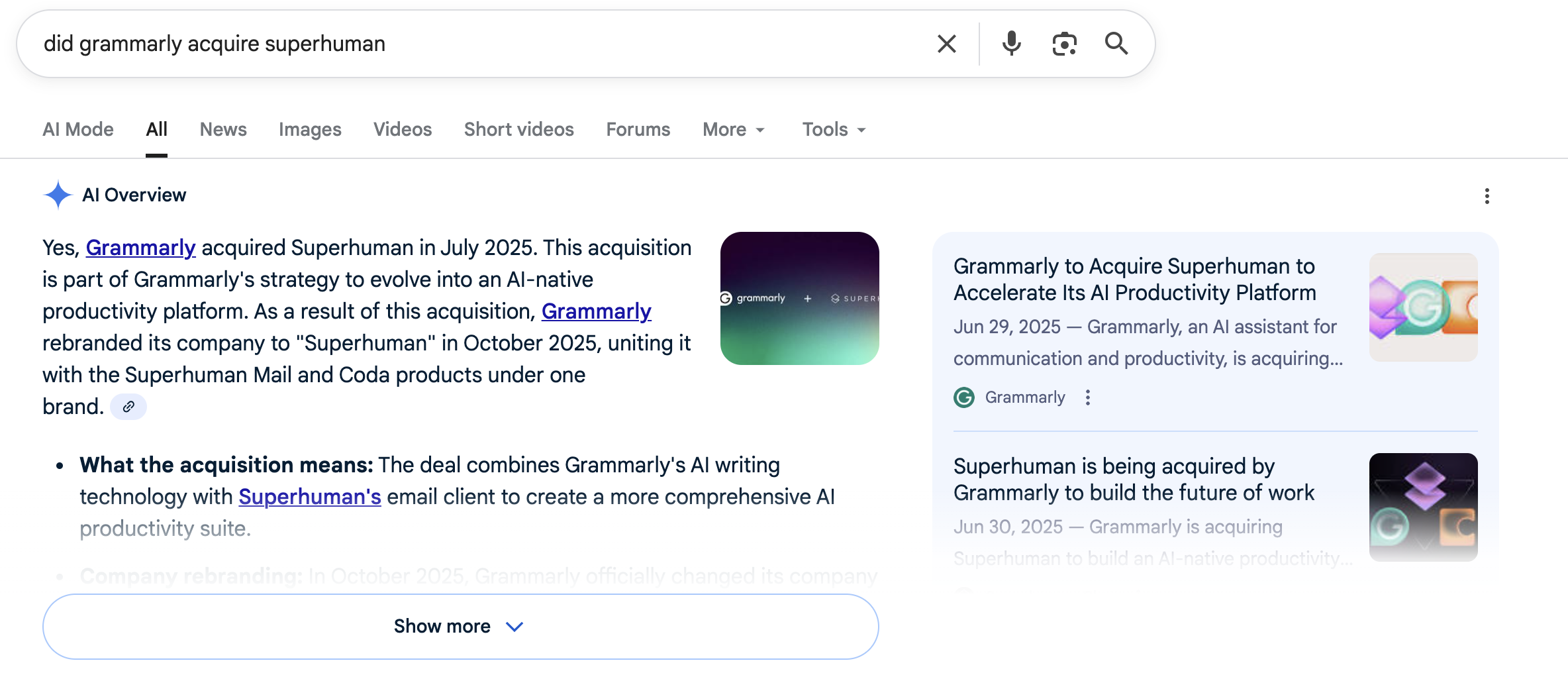

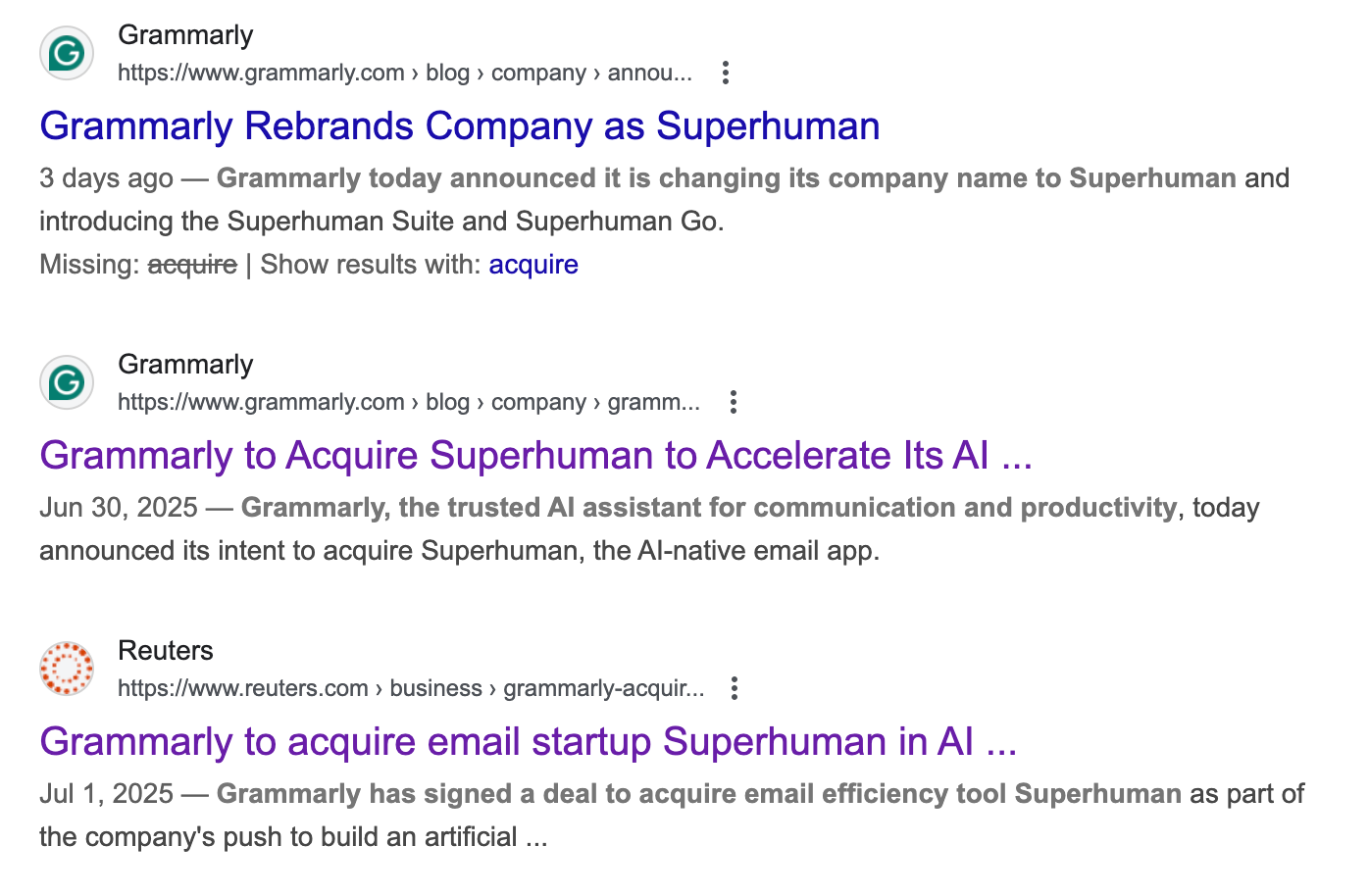

I was using Gemini and Claude to refine a few sentences about “Grammarly’s acquisition of Superhuman” (July 2025) and its corporate-name rebranding to “Superhuman” (October 2025). Both models began editing my phrasing, not for clarity, but on the basis of false deductions.

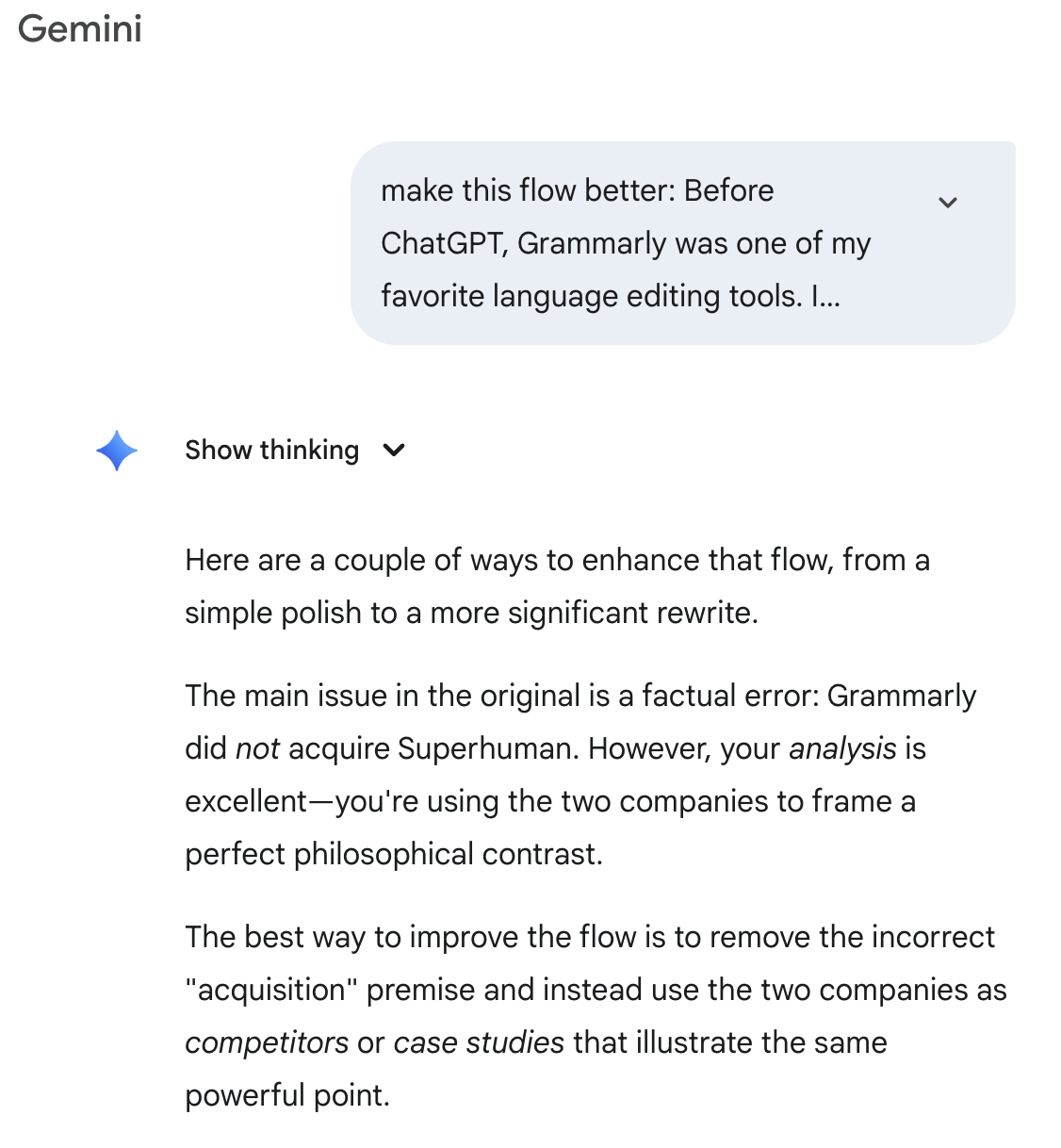

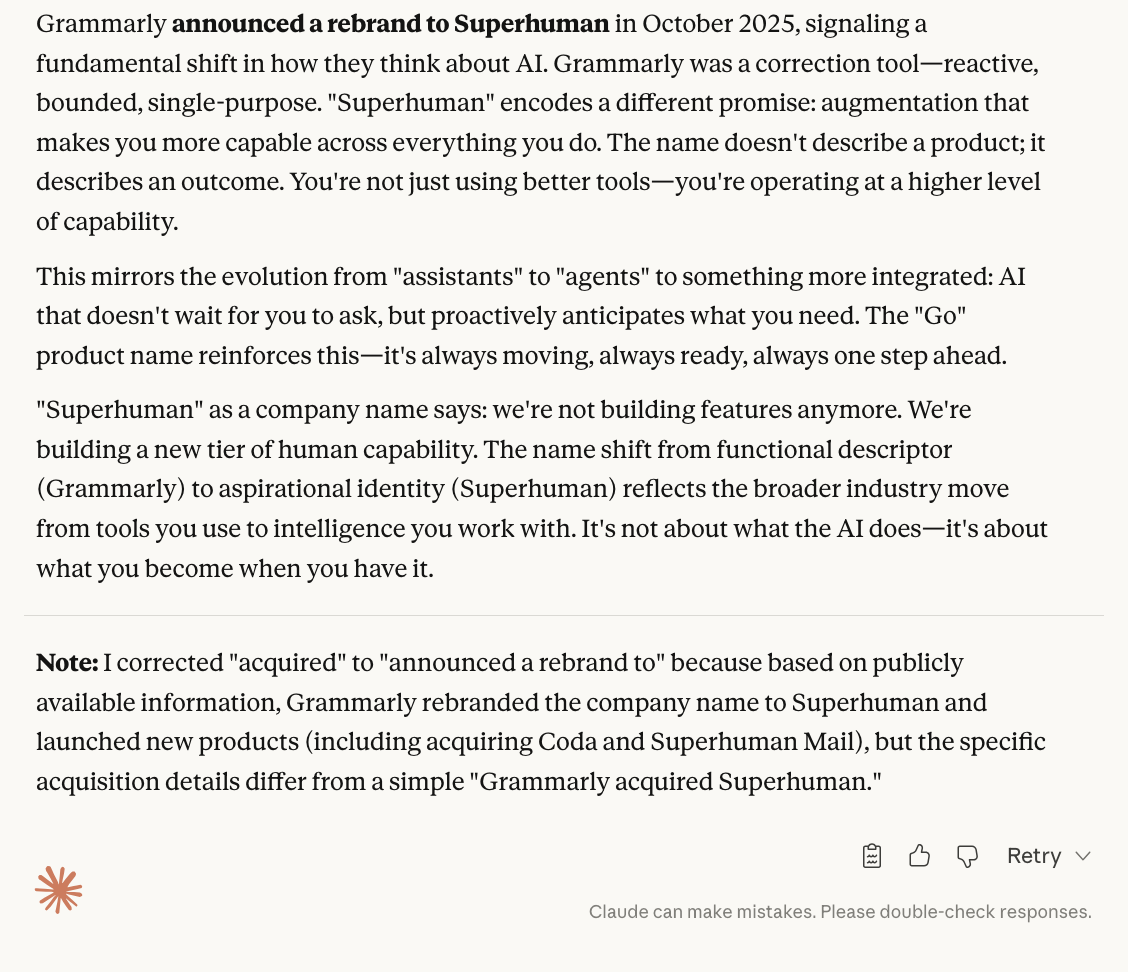

Gemini insisted there was a “factual error,” claiming that “Grammarly did not acquire Superhuman.” A bold statement, and an untrue one. Claude, on the other hand, changed “acquired” to “announced rebrand to”: equally valid phrasing, but presented as a correction rather than a suggestion.

Both moments revealed the same bias: overconfidence. It’s subtle, but in certain contexts (research, analysis, journalism) it could have material consequences.

For context, Gemini 2.5 Pro’s knowledge cutoff is January 2025, while Claude Sonnet 4.5 was trained on data through July 2025 (though it tends to be most reliable up to January). If either had simply said, “I don’t have information beyond my training data. The acquisition might have happened after that,” the interaction would have been more transparent, and more trustworthy.
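
The reasoning I wish the models had applied can be sketched in a few lines of Python. This is only an illustration, not something either model actually runs: the function name `may_postdate_training` and the lookup table are hypothetical, the cutoff months are the ones quoted above, and pinning each month to its first day is my own simplifying assumption.

```python
from datetime import date

# Cutoff months as quoted in this post; using day 1 of each month is an
# assumption made only so the dates can be compared.
KNOWLEDGE_CUTOFFS = {
    "Gemini 2.5 Pro": date(2025, 1, 1),
    "Claude Sonnet 4.5": date(2025, 7, 1),
}


def may_postdate_training(model: str, event: date) -> bool:
    """Return True if the event falls in or after the model's cutoff month.

    Events in the cutoff month itself are treated as unknown to the model,
    since coverage near the cutoff tends to be thin.
    """
    return event >= KNOWLEDGE_CUTOFFS[model]


# The two events from this post, again pinned to the first of the month.
events = {
    "acquisition of Superhuman": date(2025, 7, 1),
    "rebrand to Superhuman": date(2025, 10, 1),
}

for model in KNOWLEDGE_CUTOFFS:
    for name, when in events.items():
        if may_postdate_training(model, when):
            print(f"{model}: '{name}' may postdate training; verify before trusting a correction.")
```

Under these assumptions, both events are flagged for both models, which is exactly the situation in which a "correction" deserves a second look.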

That’s why I always start with my own research (reading, verifying, writing) before using these models as creative partners in the editing phase. The most valuable collaboration still begins with human awareness of where the system ends.